Interactive music tools are an engaging way to make sound production accessible on the web. The Web Audio API, built into modern browsers, lets developers create, process, and manipulate audio directly in the browser using JavaScript. In this tutorial, we'll use it to build a simple interactive music tool: an oscillator with a frequency slider, a low-pass filter, and start/stop controls.

Getting Started with the Web Audio API

To begin creating audio, you'll need to set up an audio context. The AudioContext provides the starting point for all your audio processes.

const audioContext = new (window.AudioContext || window.webkitAudioContext)();

This line creates an AudioContext. The fallback to window.webkitAudioContext covers older WebKit-based browsers that expose only the prefixed constructor.
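If neither constructor exists, the one-liner above throws. A minimal sketch of a more defensive setup follows; the helper name createAudioContext and the choice to return null when the API is unavailable are assumptions for illustration, not part of the Web Audio API.

```javascript
// Hypothetical helper: picks whichever constructor the environment exposes.
// Returns a new context, or null when the Web Audio API is unavailable.
function createAudioContext(globalObject) {
  const ContextConstructor =
    globalObject.AudioContext || globalObject.webkitAudioContext;
  return ContextConstructor ? new ContextConstructor() : null;
}

// Usage in a browser:
// const audioContext = createAudioContext(window);
// if (!audioContext) { /* show a "not supported" message */ }
```

Passing the global object in as a parameter keeps the helper easy to exercise outside a browser.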

Creating Audio Sources

With an audio context set up, you can start creating audio sources. A common type of source is the oscillator node, which is perfect for generating simple waveforms.

const oscillator = audioContext.createOscillator();

oscillator.type = 'sine'; // Sine wave

oscillator.frequency.setValueAtTime(440, audioContext.currentTime); // A440

oscillator.connect(audioContext.destination);

oscillator.start();

This code snippet creates an oscillator, sets it to produce a sine wave at 440Hz (concert pitch 'A'), connects it to the audio destination (i.e., your speakers), and starts it.
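For a music tool it is often more convenient to think in notes than in raw frequencies. The sketch below converts a MIDI note number to a frequency using 12-tone equal temperament; the function name midiToFrequency is hypothetical, and it assumes A4 is MIDI note 69 tuned to 440Hz.

```javascript
// Convert a MIDI note number to a frequency in Hz (12-tone equal temperament).
// Assumption: A4 is MIDI note 69 and is tuned to a4Frequency (440 Hz by default).
function midiToFrequency(midiNote, a4Frequency = 440) {
  // Each semitone multiplies the frequency by the twelfth root of 2.
  return a4Frequency * Math.pow(2, (midiNote - 69) / 12);
}

// Usage in a browser, with the oscillator and audioContext from above:
// oscillator.frequency.setValueAtTime(midiToFrequency(60), audioContext.currentTime); // middle C
```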

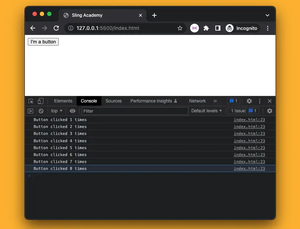

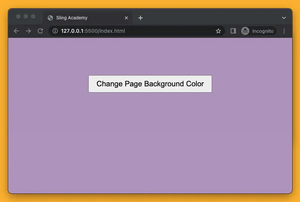

Adding User Interaction

One of the appealing aspects of building a music tool is interactivity. Let's allow the user to control the frequency of the oscillator using a range input slider.

<input id="frequency-slider" type="range" min="220" max="880" value="440">

document.getElementById('frequency-slider').addEventListener('input', function(event) {

const frequency = Number(event.target.value); // Slider values arrive as strings

oscillator.frequency.setValueAtTime(frequency, audioContext.currentTime);

});

This HTML and JavaScript combo lets users adjust the frequency of the oscillator from 220Hz to 880Hz using a slider.
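Because pitch is perceived roughly logarithmically, a linear slider spends half of its travel on the upper octave. One possible refinement, sketched below, maps a normalized slider position to frequency on a logarithmic scale. The function name positionToFrequency and the 0-to-1 slider range are assumptions for illustration.

```javascript
// Map a slider position in [0, 1] to a frequency in Hz on a logarithmic scale,
// so that equal slider distances correspond to equal musical intervals.
function positionToFrequency(position, minHz = 220, maxHz = 880) {
  // Clamp out-of-range input so the result always stays within [minHz, maxHz].
  const clamped = Math.min(1, Math.max(0, position));
  return minHz * Math.pow(maxHz / minHz, clamped);
}

// Usage in a browser, assuming a slider declared with min="0" max="1" step="0.001":
// const frequency = positionToFrequency(Number(event.target.value));
// oscillator.frequency.setValueAtTime(frequency, audioContext.currentTime);
```

With the default range, the midpoint of the slider lands on 440Hz, one octave above the minimum and one octave below the maximum.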

Adding Effects with Filters

To shape the sound, you can apply filters. Below is an example of a low-pass filter, which lets frequencies below a specified cutoff pass through while attenuating those above it:

const filter = audioContext.createBiquadFilter();

filter.type = 'lowpass';

filter.frequency.setValueAtTime(1000, audioContext.currentTime); // Initial cutoff frequency

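oscillator.disconnect(); // Remove the earlier direct connection to the destination

oscillator.connect(filter);

filter.connect(audioContext.destination);

In this snippet, the oscillator feeds the filter and the filter feeds the destination, so everything you hear has passed through the low-pass filter. Without the disconnect() call, the oscillator would still be wired straight to the destination from the earlier example, and the unfiltered signal would play alongside the filtered one. A pure sine wave at 440Hz sits below the 1000Hz cutoff, so the effect is subtle here; it becomes much more audible with a harmonically rich waveform such as 'sawtooth' or 'square'.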
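As the audio graph grows, wiring each pair of nodes by hand gets repetitive. The sketch below shows one way to connect a list of nodes in series; connectInSeries is a hypothetical helper, and it relies only on each node having a connect() method, as AudioNode does.

```javascript
// Hypothetical helper: connect each node to the next one in the list.
// Returns the last node so the caller can keep a reference to the chain's end.
function connectInSeries(...nodes) {
  for (let i = 0; i < nodes.length - 1; i++) {
    nodes[i].connect(nodes[i + 1]);
  }
  return nodes[nodes.length - 1];
}

// Usage in a browser, with the nodes created above:
// connectInSeries(oscillator, filter, audioContext.destination);
```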

Managing the Audio Lifecycle

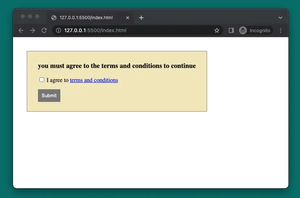

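Developers need to manage when sounds start and stop. Most browsers block audio that starts without a user gesture and keep the AudioContext suspended until the user interacts with the page, so use a button to control when sound is generated. Because start() can be called only once on a given oscillator, remove the earlier oscillator.start() call when you move it into the click handler.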

<button id="start-button">Start Sound</button>

const startButton = document.getElementById('start-button');

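function startSound() {

audioContext.resume(); // The context may be suspended until a user gesture

oscillator.start(); // Throws if this oscillator has already been started

}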

startButton.addEventListener('click', startSound);
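A user may click the button more than once, and a second start() call on the same oscillator throws an error. One way to guard against that is sketched below; createStartHandler is a hypothetical helper that also resumes a suspended context before starting.

```javascript
// Hypothetical helper: builds a click handler that resumes the context if
// needed and starts the oscillator at most once, however often it is called.
function createStartHandler(context, oscillator) {
  let started = false;
  return function handleStart() {
    if (context.state === 'suspended') {
      context.resume(); // Returns a promise; playback begins once it resolves.
    }
    if (!started) {
      oscillator.start();
      started = true;
    }
  };
}

// Usage in a browser:
// startButton.addEventListener('click', createStartHandler(audioContext, oscillator));
```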

It's good practice to release audio resources once they are no longer needed. Stop and disconnect nodes when users are done interacting.

function stopSound() {

oscillator.stop();

oscillator.disconnect();

}

// Example call of stopSound()

// stopSound(); // Call this when needed

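This function stops the oscillator and disconnects it from the audio graph. An oscillator cannot be restarted after stop(); to play the sound again, create a new oscillator. Use similar logic to manage other sound nodes and resources.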
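Since each oscillator can be started only once, a common pattern is to create a fresh oscillator for every note and let it clean up after itself. The following is a minimal sketch under that assumption; playTone and its parameters are hypothetical names, and it connects straight to the destination for simplicity.

```javascript
// Hypothetical helper: play a single sine tone for a fixed duration using a
// fresh oscillator, and disconnect it automatically when it finishes.
function playTone(context, frequency, durationSeconds) {
  const oscillator = context.createOscillator();
  oscillator.type = 'sine';
  oscillator.frequency.setValueAtTime(frequency, context.currentTime);
  oscillator.connect(context.destination);

  // The 'ended' event fires after the scheduled stop time has passed.
  oscillator.onended = () => oscillator.disconnect();

  const startTime = context.currentTime;
  oscillator.start(startTime);
  oscillator.stop(startTime + durationSeconds);
  return oscillator;
}

// Usage in a browser:
// playTone(audioContext, 440, 0.5); // half a second of A440
```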

Conclusion

The Web Audio API gives you direct control over the audio graph, which makes it a solid foundation for web-based music tools and applications. Start by setting up an audio context, explore oscillators and filters, and then add user interactions and controls to bring the tool to life.