Voice-activated interfaces offer a hands-free way for users to interact with web applications, enhancing accessibility and providing an alternative to traditional input methods. In this article, we'll explore how to create voice-activated web applications using the Web Speech API in JavaScript.

Introduction to the Web Speech API

The Web Speech API provides two main interfaces: SpeechSynthesis for converting text to speech and SpeechRecognition for recognizing voice input. Our focus will be on SpeechRecognition to enable voice commands in web applications.
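
This article concentrates on recognition, but a short sketch of the SpeechSynthesis side shows how the other half of the API is used. It assumes a browser that provides window.speechSynthesis and the SpeechSynthesisUtterance constructor. The speak helper and its env parameter are hypothetical. env defaults to the global object so the logic can also run outside a browser.

```javascript
// Minimal text-to-speech sketch. `speak` and its `env` parameter are
// hypothetical helpers for illustration; `env` defaults to the global object.
function speak(text, { lang = 'en-US', rate = 1 } = {}, env = globalThis) {
  // Feature-detect both pieces of the SpeechSynthesis interface.
  if (!env.speechSynthesis || typeof env.SpeechSynthesisUtterance !== 'function') {
    console.log('SpeechSynthesis is not supported in this environment.');
    return false;
  }

  const utterance = new env.SpeechSynthesisUtterance(text);
  utterance.lang = lang; // BCP 47 language tag, e.g. 'en-US'
  utterance.rate = rate; // 1 is the normal speaking rate

  // Queue the utterance; queued utterances are spoken in order.
  env.speechSynthesis.speak(utterance);
  return true;
}
```

Some browsers only allow speech output after a user interaction, so a typical call site is a click handler, for example button.addEventListener('click', () => speak('Hello')), where button is an element of your choosing.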

Getting Started with SpeechRecognition

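Browser support for SpeechRecognition varies. At the time of writing, Chromium-based browsers such as Chrome and Edge, as well as Safari 14.1 and later, expose it under the prefixed name webkitSpeechRecognition, while Firefox does not enable it by default. Here’s how you can set up SpeechRecognition in JavaScript: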

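```javascript
// Use the standard constructor where available, otherwise the prefixed one.
const SpeechRecognition = window.SpeechRecognition || window.webkitSpeechRecognition;

if (!SpeechRecognition) {
  console.log('SpeechRecognition is not supported by your browser.');
} else {
  const recognition = new SpeechRecognition();

  // Fires when the recognition service starts listening.
  recognition.onstart = function () {
    console.log('Voice recognition started. Try speaking into the microphone.');
  };

  // event.results[0][0] is the most likely transcript of the first result.
  recognition.onresult = function (event) {
    console.log('Result received: ', event.results[0][0].transcript);
  };

  recognition.onerror = function (event) {
    console.log('Error occurred in recognition: ' + event.error);
  };

  recognition.onend = function () {
    console.log('Voice recognition ended.');
  };

  // Nothing happens until start() is called; the browser then asks for microphone access.
  recognition.start();
}
```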

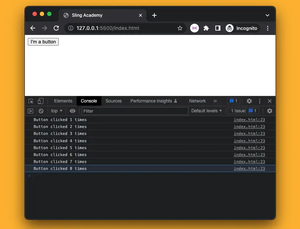

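This script sets up basic speech recognition that logs results to the console and handles errors and lifecycle events such as onstart and onend. Recognition does not begin until recognition.start() is called, at which point the browser asks the user for permission to use the microphone.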
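
The handlers above are callback-based. If the rest of your code uses async/await, one option is to wrap a single recognition session in a Promise. This is a sketch, not part of the Web Speech API: listenOnce is a hypothetical helper, and RecognitionCtor is whichever constructor feature detection found (SpeechRecognition or webkitSpeechRecognition).

```javascript
// Hypothetical helper: run one recognition session and resolve with the
// first transcript, or reject on an error or when the session ends silently.
function listenOnce(RecognitionCtor, lang = 'en-US') {
  return new Promise((resolve, reject) => {
    const recognition = new RecognitionCtor();
    recognition.lang = lang;
    recognition.continuous = false;     // stop after the first final result
    recognition.interimResults = false; // only deliver final results

    let settled = false;

    recognition.onresult = (event) => {
      settled = true;
      resolve(event.results[0][0].transcript);
    };

    recognition.onerror = (event) => {
      settled = true;
      reject(new Error('Recognition error: ' + event.error));
    };

    // onend also fires when the user says nothing; treat that as a failure.
    recognition.onend = () => {
      if (!settled) {
        reject(new Error('Recognition ended without a result.'));
      }
    };

    recognition.start();
  });
}
```

In a browser you might call it from a button's click handler, for example listenOnce(window.SpeechRecognition || window.webkitSpeechRecognition).then((text) => console.log('Heard:', text)).catch(console.error). The promise settles on whichever comes first: a result, an error, or the end of a silent session.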

Configuring SpeechRecognition

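A SpeechRecognition instance exposes several properties you can configure:

- recognition.continuous: When true, recognition keeps listening through pauses and can return several final results. When false (the default), it stops after the first final result.
- recognition.lang: Sets the recognition language as a BCP 47 tag, for example 'en-US' for US English. If it is not set, the browser falls back to the page's lang attribute or the user's language setting.
- recognition.interimResults: When true, returns interim (not yet final) results, so the application can react to speech before the user stops talking. The default is false.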

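```javascript
recognition.continuous = true;      // keep listening through pauses
recognition.lang = 'en-US';         // BCP 47 language tag
recognition.interimResults = false; // deliver final results only
```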
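
When interimResults is true, and especially when continuous is also enabled, a single result event can contain both finalized and still-changing results. A common pattern is to read only the results from event.resultIndex onward and check each result's isFinal flag. The collectTranscripts helper below is a hypothetical sketch of that pattern; the browser wiring runs only where window and a recognition constructor exist.

```javascript
// Hypothetical helper: split a result event into final and interim text.
// Results before event.resultIndex were delivered in earlier events and
// have not changed, so only the new or changed ones are examined.
function collectTranscripts(event) {
  let finalText = '';
  let interimText = '';
  for (let i = event.resultIndex; i < event.results.length; i++) {
    const result = event.results[i];
    // result[0] is the most likely alternative for this segment.
    if (result.isFinal) {
      finalText += result[0].transcript;
    } else {
      interimText += result[0].transcript;
    }
  }
  return { finalText, interimText };
}

// Browser wiring: skipped when there is no window or no recognition support.
if (typeof window !== 'undefined') {
  const Ctor = window.SpeechRecognition || window.webkitSpeechRecognition;
  if (Ctor) {
    const recognition = new Ctor();
    recognition.continuous = true;
    recognition.interimResults = true; // deliver partial results while speaking
    let transcript = '';
    recognition.onresult = (event) => {
      const { finalText, interimText } = collectTranscripts(event);
      transcript += finalText; // final results do not change once delivered
      console.log('Final so far:', transcript, '| Interim:', interimText);
    };
    recognition.start();
  }
}
```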

Handling SpeechRecognition Results

Once speech is recognized, the application needs to act on it. Here’s an example that extends the onresult event handler to take actions based on recognized words or phrases:

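```javascript
recognition.onresult = function (event) {
  // With continuous = true, event.results accumulates every result from the
  // session, so read the newest one (at event.resultIndex) rather than index 0.
  const speechResult = event.results[event.resultIndex][0].transcript;
  // Lowercase so that "Blue" and "blue" are treated the same.
  const command = speechResult.toLowerCase();

  console.log('Speech recognized: ', speechResult);

  if (command.includes('blue')) {
    document.body.style.backgroundColor = 'blue';
  } else if (command.includes('red')) {
    document.body.style.backgroundColor = 'red';
  } else {
    console.log('Color not recognized, keeping default.');
  }
};
```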

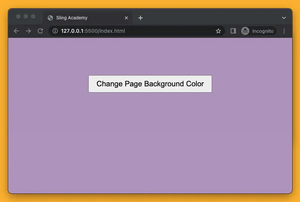

With this snippet, if the user speaks the word "blue" or "red", the background color of the document changes accordingly.
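
As the number of commands grows, an if/else chain becomes hard to maintain. One alternative, sketched here under the assumption that each command is a single English keyword, is a lookup table from keywords to actions. commands, setBackground, matchCommand, and handleTranscript are hypothetical names. Whole-word matching is a deliberate design choice: a plain includes('red') check would also react to words such as "bored".

```javascript
// Hypothetical command table: each keyword maps to an action.
// Adding a command means adding an entry, not another else-if branch.
const commands = {
  blue: () => setBackground('blue'),
  red: () => setBackground('red'),
  green: () => setBackground('green'),
};

function setBackground(color) {
  // Only touch the DOM when running in a browser.
  if (typeof document !== 'undefined') {
    document.body.style.backgroundColor = color;
  }
  return color;
}

// Return the first keyword (in spoken order) found in the transcript, or null.
// Matching is case-insensitive and on whole ASCII words, so "Blue." matches
// "blue" but "blueberries" does not. hasOwnProperty skips inherited names
// such as "constructor".
function matchCommand(transcript, table) {
  const words = transcript.toLowerCase().match(/[a-z']+/g) || [];
  return words.find((word) => Object.prototype.hasOwnProperty.call(table, word)) || null;
}

function handleTranscript(transcript) {
  const key = matchCommand(transcript, commands);
  if (key === null) {
    console.log('Color not recognized, keeping default.');
    return null;
  }
  return commands[key]();
}
```

To use it, pass the newest transcript from the result event, for example recognition.onresult = (event) => handleTranscript(event.results[event.resultIndex][0].transcript);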

Conclusion

Voice-activated interfaces can significantly improve the user experience and are a valuable addition to many modern web applications. With the Web Speech API, developers can build interactive, voice-controlled features in JavaScript with relatively little code. Because browser support varies, always provide an alternative input method for users whose browsers do not support speech recognition.
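
One way to follow that advice is progressive enhancement: detect recognition support up front and keep a text input available as the fallback. In this sketch chooseInputMode is a hypothetical helper whose env parameter defaults to the global object so it can be tested outside a browser, and the command-input element ID is an assumption about your page.

```javascript
// Hypothetical helper: choose voice input when a recognition constructor
// exists, otherwise fall back to text input.
function chooseInputMode(env = globalThis) {
  const Recognition = env.SpeechRecognition || env.webkitSpeechRecognition;
  return typeof Recognition === 'function'
    ? { mode: 'voice', Recognition }
    : { mode: 'text', Recognition: null };
}

// Browser wiring: assumes a hypothetical <input id="command-input"> that
// stays available in both modes so typing always works.
if (typeof document !== 'undefined') {
  const { mode } = chooseInputMode(window);
  console.log('Input mode:', mode);
  const input = document.getElementById('command-input');
  if (input && mode === 'text') {
    input.placeholder = 'Voice input is unavailable; type a command instead';
  }
}
```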

Continue to experiment and expand your project by creating voice commands for different interactions, improving the functionality and accessibility of your application.

Happy coding!