With touch screens now the primary input on phones and tablets, detecting gestures such as swipes has become a core part of building interactive web applications. Using the JavaScript Touch Events API, developers can detect and handle touch movements such as swipes, taps, and pinches. This guide shows how to use the API for gesture detection.

Understanding Touch Events in JavaScript

Touch events in JavaScript are designed to handle touch input from users. The primary touch events you’ll encounter are:

- touchstart: Triggered when a finger touches the screen.

- touchmove: Triggered when a finger moves around on the screen.

- touchend: Triggered when a finger leaves the screen.

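- touchcancel: Triggered when a touch is interrupted before it ends normally, for example when the browser or operating system takes over the gesture or too many touch points are active.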
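
Each of these events carries lists of touch points: `touches` holds every finger currently on the screen, and `changedTouches` holds the fingers whose state changed in this particular event. The sketch below is one way to inspect them; `summarizeTouchEvent` is a hypothetical helper name, and the wiring assumes an element with the id `touchArea`, as used in the rest of this guide. Note that on `touchend` the lifted finger appears only in `changedTouches`.

```javascript
// Hypothetical helper (not part of the Touch Events API): reduces a touch
// event to a plain object that is easy to log or inspect.
function summarizeTouchEvent(evt) {
  return {
    type: evt.type,
    // Number of fingers currently on the screen.
    active: evt.touches.length,
    // Fingers whose state changed in this event (added, moved, or lifted).
    changed: Array.from(evt.changedTouches, (t) => ({
      id: t.identifier,
      x: t.clientX,
      y: t.clientY,
    })),
  };
}

// Browser-only wiring; 'touchArea' is an assumed element id.
if (typeof document !== 'undefined') {
  const area = document.getElementById('touchArea');
  if (area) {
    for (const type of ['touchstart', 'touchmove', 'touchend', 'touchcancel']) {
      area.addEventListener(type, (evt) => console.log(summarizeTouchEvent(evt)));
    }
  }
}
```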

Implementing Basic Touch Event Listeners

To capture touch gestures, you need to set up event listeners for these touch events. Here's an example of attaching touch event listeners to an HTML element:

    const touchArea = document.getElementById('touchArea');

    touchArea.addEventListener('touchstart', handleTouchStart, false);
    touchArea.addEventListener('touchmove', handleTouchMove, false);
    touchArea.addEventListener('touchend', handleTouchEnd, false);
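
The third argument `false` above only selects the bubbling phase. If a handler needs to call `evt.preventDefault()`, for example to stop the page from scrolling during a swipe, it can help to pass an options object with `passive: false`, because some browsers treat certain touch listeners as passive by default. The following is a minimal sketch under that assumption; `attachTouchListeners` is a hypothetical helper, not part of any API.

```javascript
// Hypothetical helper: attaches the given handlers to an element and returns
// a function that removes them again.
function attachTouchListeners(element, handlers) {
  // passive: false lets handlers call evt.preventDefault().
  const options = { passive: false };
  const entries = Object.entries(handlers); // e.g. [['touchstart', fn], ...]

  for (const [type, handler] of entries) {
    element.addEventListener(type, handler, options);
  }

  return function detach() {
    for (const [type, handler] of entries) {
      element.removeEventListener(type, handler, options);
    }
  };
}

// Possible usage in a browser, with the handlers defined in this guide:
// const detach = attachTouchListeners(touchArea, {
//   touchstart: handleTouchStart,
//   touchmove: handleTouchMove,
//   touchend: handleTouchEnd,
// });
// detach(); // later, when the element no longer needs touch handling
```

Returning a cleanup function keeps registration and removal in one place, which is useful when the element is created and destroyed dynamically.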

Detecting a Swipe Gesture

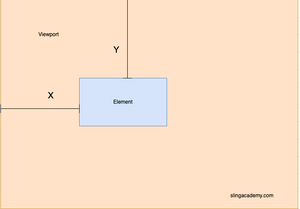

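Detecting a swipe involves recording where the touch starts on the touchArea and comparing that position with where the finger moves to. The axis with the larger change gives the direction, and the size of the change gives the distance. The simple implementation below classifies the swipe on the first touchmove that follows touchstart: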

    // Start coordinates of the current touch; null means no swipe is being tracked.
    let xDown = null;
    let yDown = null;

    function handleTouchStart(evt) {
      const firstTouch = evt.touches[0];
      xDown = firstTouch.clientX;
      yDown = firstTouch.clientY;
    }

    function handleTouchMove(evt) {
      // Compare against null explicitly: 0 is a valid coordinate.
      if (xDown === null || yDown === null) {
        return;
      }

      const xUp = evt.touches[0].clientX;
      const yUp = evt.touches[0].clientY;
      const xDiff = xDown - xUp;
      const yDiff = yDown - yUp;

      // The axis with the larger movement decides the direction.
      if (Math.abs(xDiff) > Math.abs(yDiff)) {
        if (xDiff > 0) {
          console.log('Swipe Left');
        } else {
          console.log('Swipe Right');
        }
      } else {
        if (yDiff > 0) {
          console.log('Swipe Up');
        } else {
          console.log('Swipe Down');
        }
      }

      // Stop tracking so that each touch reports at most one swipe.
      xDown = null;
      yDown = null;
    }

    function handleTouchEnd(evt) {
      // Clear any leftover start position, for example after a tap with no movement.
      xDown = null;
      yDown = null;
      console.log('Touch ended');
    }
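
The handlers above report a direction on the first touchmove, so even a tiny movement counts as a swipe. One possible refinement, sketched here as an assumption rather than a required approach, is to wait for touchend and ignore movements shorter than a minimum distance. The helper name `classifySwipe` and the default threshold of 30 pixels are illustrative choices, not values from any specification.

```javascript
// Hypothetical helper: classifies the movement from `start` to `end`
// (objects with x and y in CSS pixels). Returns 'left', 'right', 'up',
// 'down', or null when the movement is shorter than minDistance.
function classifySwipe(start, end, minDistance = 30) {
  const dx = end.x - start.x;
  const dy = end.y - start.y;

  if (Math.max(Math.abs(dx), Math.abs(dy)) < minDistance) {
    return null; // too short: treat as a tap or jitter
  }
  if (Math.abs(dx) > Math.abs(dy)) {
    return dx < 0 ? 'left' : 'right';
  }
  // Screen y grows downward, so a negative dy means the finger moved up.
  return dy < 0 ? 'up' : 'down';
}

// Browser-only wiring; 'touchArea' is the element id used in this guide.
if (typeof document !== 'undefined') {
  const area = document.getElementById('touchArea');
  let start = null;
  if (area) {
    area.addEventListener('touchstart', (evt) => {
      const t = evt.changedTouches[0];
      start = { x: t.clientX, y: t.clientY };
    });
    area.addEventListener('touchend', (evt) => {
      if (start === null) return;
      // The lifted finger is in changedTouches; touches no longer contains it.
      const t = evt.changedTouches[0];
      const direction = classifySwipe(start, { x: t.clientX, y: t.clientY });
      if (direction !== null) console.log('Swipe ' + direction);
      start = null;
    });
  }
}
```

Keeping the classification in a pure function makes the threshold easy to tune and the logic easy to test without a touch device.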

Advanced Gesture Detection

Beyond simple swipes, you might want to detect more complex gestures like pinch-to-zoom. This typically involves handling multiple touch points. While JavaScript by itself can process these gestures, libraries like Hammer.js simplify the process significantly.
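
Handling multiple touch points usually starts with knowing which fingers are currently down. As a minimal sketch, the tracker below keys each finger by `Touch.identifier`, which stays the same for as long as that finger remains on the screen. The names `createTouchTracker`, `update`, and `spread` are hypothetical and chosen only for illustration.

```javascript
// Hypothetical tracker: keeps the latest position of every active finger,
// keyed by Touch.identifier.
function createTouchTracker() {
  const active = new Map();

  return {
    // Call with every touchstart, touchmove, touchend, and touchcancel event.
    // Returns the number of fingers still on the screen.
    update(evt) {
      for (const t of Array.from(evt.changedTouches)) {
        if (evt.type === 'touchend' || evt.type === 'touchcancel') {
          active.delete(t.identifier);
        } else {
          active.set(t.identifier, { x: t.clientX, y: t.clientY });
        }
      }
      return active.size;
    },
    // Distance between the first two active fingers, or null with fewer than two.
    spread() {
      if (active.size < 2) return null;
      const [a, b] = Array.from(active.values());
      return Math.hypot(b.x - a.x, b.y - a.y);
    },
  };
}
```

In a browser, the same `update` method could be registered for all four touch events on the target element, and `spread()` read inside the touchmove handler.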

To detect a pinch, you would calculate the distance between two fingers and monitor how it changes:

    // Distance between the two fingers at the last reported step;
    // null until two fingers are on the screen.
    let initialDistance = null;

    touchArea.addEventListener('touchmove', (evt) => {
      if (evt.touches.length === 2) {
        const x0 = evt.touches[0].clientX;
        const y0 = evt.touches[0].clientY;
        const x1 = evt.touches[1].clientX;
        const y1 = evt.touches[1].clientY;
        const distance = Math.hypot(x1 - x0, y1 - y0);

        if (initialDistance === null) {
          initialDistance = distance;
        } else {
          const change = distance - initialDistance;
          // Ignore changes of 10 pixels or less to filter out jitter.
          if (Math.abs(change) > 10) {
            // Fingers moving apart conventionally zoom in; moving together zooms out.
            console.log(change > 0 ? 'Pinch Out (zoom in)' : 'Pinch In (zoom out)');
            initialDistance = distance; // measure the next step from here
          }
        }
      }
    }, false);

    // Forget the baseline when a finger lifts so the next pinch starts fresh.
    touchArea.addEventListener('touchend', () => {
      initialDistance = null;
    }, false);

Conclusion

Working with touch inputs requires an understanding of how users interact with devices. By implementing these basic touch events and understanding how to track finger movements, developers can create immersive, intuitive web interfaces that go beyond traditional point-and-click interactions.

Further optimizations and additional gestures can be handled with specialized libraries such as Hammer.js, depending on your application's needs.