Overview

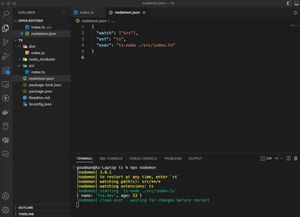

Working with CSV files is a common task in software development, and Node.js makes it simple to read from and write to these files with both built-in modules and community-driven packages. CSV, or Comma-Separated Values, is a straightforward file format used to store tabular data. In this tutorial, we’ll explore different methods to handle CSV files in Node.js, starting with basic examples and moving to more advanced scenarios.

We will start by using the native ‘fs’ module for basic operations and then look at how we can leverage powerful third-party libraries like ‘csv-parser’ and ‘fast-csv’ to handle more complex CSV tasks.

Reading CSV Files

Let’s start with reading a CSV file using the ‘fs’ module:

const fs = require('fs');

fs.readFile('/path/to/your/file.csv', 'utf8', (err, data) => {
  if (err) {
    console.error('Error reading the file', err);
    return;
  }

  // Split on LF or CRLF, skip the header row, and drop blank lines
  // (such as the one left by a trailing newline).
  const rows = data.split(/\r?\n/).slice(1).filter((row) => row !== '');

  rows.forEach((row) => {
    const columns = row.split(',');
    // Handle each column of each row
  });
});

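This example reads the whole CSV file into a string, splits it into lines, skips the header row, and then splits each line on commas to access individual cells. A plain split on commas is only suitable for simple data: it breaks on quoted fields that contain commas or line breaks.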
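To illustrate why a plain split on commas falls short, here is a minimal sketch of a quote-aware line parser. The helper name `parseCsvLine` is hypothetical, and the sketch assumes fields are quoted with double quotes, that a doubled quote inside a quoted field stands for a literal quote, and that no field spans more than one line.

```javascript
// Hypothetical helper: splits one CSV line into fields, honoring double quotes.
// Assumes a field never contains a line break.
function parseCsvLine(line) {
  const fields = [];
  let current = '';
  let inQuotes = false;

  for (let i = 0; i < line.length; i++) {
    const char = line[i];

    if (inQuotes) {
      if (char === '"' && line[i + 1] === '"') {
        // A doubled quote inside a quoted field is a literal quote.
        current += '"';
        i++;
      } else if (char === '"') {
        inQuotes = false; // closing quote
      } else {
        current += char;
      }
    } else if (char === '"') {
      inQuotes = true; // opening quote
    } else if (char === ',') {
      // An unquoted comma ends the current field.
      fields.push(current);
      current = '';
    } else {
      current += char;
    }
  }

  fields.push(current); // last field on the line
  return fields;
}

// The second field keeps its comma, the third keeps its quotes.
console.log(parseCsvLine('Alice,"Smith, Jr.","said ""hi"""'));
```

For anything beyond simple input, a dedicated parsing library is usually the safer choice.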

Writing CSV Files

To write data to a CSV file, we’ll use the ‘fs’ module again:

const fs = require('fs');

const data = [
  ['name', 'age', 'email'],
  ['Alice', '23', '[email protected]'],
  // ... more data
];

const csvContent = data.map((row) => row.join(',')).join('\n');

fs.writeFile('/path/to/your/output.csv', csvContent, 'utf8', (err) => {
  if (err) {
    console.error('Error writing the file', err);
  }
});

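This basic example takes an array of arrays, joins each inner array into a comma-separated line, joins the lines with newlines, and writes the result to a file. It does not escape values, so a field that itself contains a comma, a double quote, or a line break would produce a malformed file.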
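As a sketch of how values could be escaped before joining, the following uses the common CSV convention of wrapping a field in double quotes when it contains a comma, a quote, or a line break, and doubling any quotes inside it. The helper names `escapeCsvField` and `toCsv` are hypothetical and not part of any library.

```javascript
// Hypothetical helper: quotes a single value if it contains characters
// that would otherwise break the CSV structure.
function escapeCsvField(value) {
  const text = String(value);
  if (/[",\r\n]/.test(text)) {
    // Double any embedded quotes, then wrap the whole field in quotes.
    return '"' + text.replace(/"/g, '""') + '"';
  }
  return text;
}

// Hypothetical helper: converts an array of arrays into CSV text.
function toCsv(rows) {
  return rows.map((row) => row.map(escapeCsvField).join(',')).join('\n');
}

const rows = [
  ['name', 'note'],
  ['Alice', 'likes "tea", coffee'],
];

console.log(toCsv(rows));
```

The resulting string can be passed to `fs.writeFile` in place of the unescaped content.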

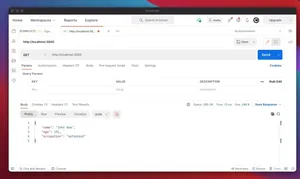

Advanced Reading with ‘csv-parser’

For more advanced CSV reading, we can use the ‘csv-parser’ library, which handles various edge cases and provides a stream-friendly interface:

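const fs = require('fs');
const csv = require('csv-parser');

fs.createReadStream('/path/to/your/file.csv')
  .on('error', (err) => {
    // Failures such as a missing file are emitted on the read stream
    console.error('Error reading the file', err);
  })
  .pipe(csv())
  .on('data', (row) => {
    console.log(row);
  })
  .on('end', () => {
    console.log('CSV file successfully processed');
  });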

This streams the file in chunks rather than loading it all at once, and emits each row as a JavaScript object whose keys come from the header row.

Advanced Writing with ‘fast-csv’

To write CSV files more efficiently, you can use the ‘fast-csv’ library. It provides a flexible API to format data and write it to a writable stream:

const fs = require('fs');
const fastCsv = require('fast-csv');

const data = [{ name: 'Alice', age: '23', email: '[email protected]' }, /* more data */ ];

const ws = fs.createWriteStream('/path/to/your/output.csv');

fastCsv
  .write(data, { headers: true })
  .pipe(ws);

The ‘fast-csv’ library will take care of converting the array of objects into a CSV format, including headers.

Working with Large CSV Files

When working with large CSV files, process the data in chunks rather than loading the entire file into memory. Node.js streams are well suited to this task.

By using streams, we can read and write large CSV files line by line, processing and transferring data in a memory-efficient manner.
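A minimal sketch of this idea, using only built-in modules, might look like the following. The function name `transformCsv` and its `transformLine` callback are hypothetical. The sketch assumes Node.js 15 or later (for `stream/promises`) and assumes that no field contains an embedded line break, since it splits the input purely on newlines.

```javascript
const fs = require('fs');
const { pipeline } = require('stream/promises');

// Hypothetical helper: copies a CSV file line by line, passing each line
// through transformLine, without holding the whole file in memory.
async function transformCsv(inputPath, outputPath, transformLine) {
  await pipeline(
    fs.createReadStream(inputPath, 'utf8'),
    async function* (source) {
      let leftover = ''; // partial line carried over between chunks
      for await (const chunk of source) {
        const lines = (leftover + chunk).split(/\r?\n/);
        leftover = lines.pop(); // last piece may be an incomplete line
        for (const line of lines) {
          yield transformLine(line) + '\n';
        }
      }
      if (leftover !== '') {
        yield transformLine(leftover) + '\n'; // final line without newline
      }
    },
    fs.createWriteStream(outputPath)
  );
}

// Example call (paths are placeholders):
// transformCsv('/path/to/your/file.csv', '/path/to/your/output.csv',
//   (line) => line.toUpperCase())
//   .then(() => console.log('Done'))
//   .catch((err) => console.error('Processing failed', err));
```

`pipeline` takes care of backpressure between the stages and rejects the returned promise if any stage fails, so only a small part of the file is buffered at any time.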

Error Handling

Error handling is crucial when dealing with file systems. Always listen for 'error' events on your streams and handle them appropriately.

Any I/O operation can fail, and your applications must be prepared to handle such cases gracefully.
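As one way to put this into practice, the sketch below wraps a read stream in a promise so that failures such as a missing file surface through `catch`. The helper name `countNewlines` is hypothetical, and counting newline characters is only a stand-in for real row processing.

```javascript
const fs = require('fs');

// Hypothetical helper: counts newline characters in a file and reports
// stream failures by rejecting the returned promise.
function countNewlines(filePath) {
  return new Promise((resolve, reject) => {
    let count = 0;

    fs.createReadStream(filePath, 'utf8')
      // Without an 'error' listener, a failed read would throw an
      // uncaught exception and crash the process.
      .on('error', reject)
      .on('data', (chunk) => {
        for (const char of chunk) {
          if (char === '\n') count++;
        }
      })
      .on('end', () => resolve(count));
  });
}

countNewlines('/path/to/your/file.csv')
  .then((count) => console.log(`Found ${count} newline characters`))
  .catch((err) => console.error('Could not read the file:', err.code));
```

When several streams are piped together, each one can fail independently, so every stream in the chain needs its own 'error' listener unless a helper such as `stream.pipeline` is used to collect failures in one place.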

Conclusion

In summary, Node.js provides great tools and libraries for reading from and writing to CSV files. Starting with simple file operations using the ‘fs’ module, we can move to more powerful, stream-based solutions for handling larger datasets and more complex requirements. Remember to handle I/O operations with proper error handling to ensure the robustness of your applications. With the patterns and libraries discussed, you are now equipped to manage CSV data effectively in your next Node.js project.