Introduction

Streaming large files in NestJS can significantly improve memory efficiency by handling data in chunks rather than loading entire files into memory. This tutorial guides you through implementing streaming for handling large files in a NestJS application.

Getting Started with Streaming

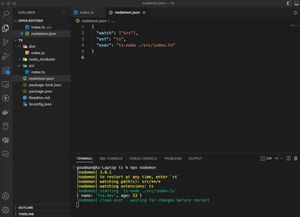

Before streaming files, ensure that NestJS is installed. You can create a new project with the Nest CLI by running `nest new project-name`. Let’s begin by setting up a simple route that streams a large file:

```typescript
import { Controller, Get, Res } from '@nestjs/common';
import { Response } from 'express';
import { createReadStream } from 'fs';

@Controller('files')
export class FilesController {
  @Get('stream')
  streamFile(@Res() res: Response) {
    const filePath = 'path/to/your/large/file.txt';
    // Read the file in chunks instead of loading it all into memory
    const readStream = createReadStream(filePath);
    // Forward each chunk to the HTTP response as soon as it is read
    readStream.pipe(res);
  }
}
```

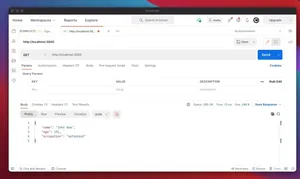

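This controller provides a GET endpoint at `/files/stream` that streams a file to the client. Because the handler injects the Express response with `@Res()`, Nest does not send a response on its behalf. Instead, `pipe()` ends the response once the whole file has been read.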
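The chunking is what keeps memory use low. The standalone script below (plain Node.js, no Nest) reads a file with `createReadStream` and tallies the chunks it receives. It is a minimal sketch: the `countChunks` helper, the temporary 1 MiB sample file, and the explicit 64 KiB `highWaterMark` are illustrative assumptions. `highWaterMark` caps the size of each chunk, and 64 KiB is also the default for file read streams.

```typescript
import { createReadStream, mkdtempSync, rmSync, writeFileSync } from 'fs';
import { tmpdir } from 'os';
import { join } from 'path';

interface ChunkStats {
  chunks: number;  // number of 'data' events
  bytes: number;   // total bytes received
  largest: number; // size of the biggest chunk
}

// Reads a file through a stream and records how it was split into chunks.
function countChunks(filePath: string, highWaterMark: number): Promise<ChunkStats> {
  return new Promise((resolve, reject) => {
    const stats: ChunkStats = { chunks: 0, bytes: 0, largest: 0 };
    createReadStream(filePath, { highWaterMark })
      .on('data', (chunk: Buffer | string) => {
        const size = Buffer.byteLength(chunk);
        stats.chunks += 1;
        stats.bytes += size;
        stats.largest = Math.max(stats.largest, size);
      })
      .on('end', () => resolve(stats))
      .on('error', reject);
  });
}

// Usage: write a 1 MiB sample file to a temporary directory and stream it back
const dir = mkdtempSync(join(tmpdir(), 'stream-demo-'));
const samplePath = join(dir, 'sample.txt');
writeFileSync(samplePath, Buffer.alloc(1024 * 1024, 'a'));

countChunks(samplePath, 64 * 1024)
  .then((stats) => console.log(stats))
  .finally(() => rmSync(dir, { recursive: true, force: true }));
```

At no point does the process hold more than one chunk of the file in its own variables, however large the file grows.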

Improving Streaming with Proper Content Types

Next, make sure the response carries the correct MIME type. The following `downloadFile` method, added to the same controller, sets the `Content-Type` header before piping the file:

```typescript
import { createReadStream } from 'fs';

// ... previous code

@Get('download')
downloadFile(@Res() res: Response) {
  const filePath = 'path/to/your/large/file.txt';
  // Set headers before piping: they go out together with the first chunk
  res.setHeader('Content-Type', 'text/plain');
  const readStream = createReadStream(filePath);
  readStream.pipe(res);
}

// ... remaining code
```

Setting the `Content-Type` header tells the client how to interpret the bytes it receives. Express does not add this header for you when you pipe a stream into the response.
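A hard-coded `text/plain` only fits one kind of file. The following minimal sketch chooses the header from the file extension instead. The `contentTypeFor` helper and its small lookup table are hypothetical, and a maintained package such as `mime-types` covers far more types.

```typescript
import { extname } from 'path';

// Hypothetical lookup table: extend it with the file types your application serves.
const MIME_TYPES: Record<string, string> = {
  '.txt': 'text/plain; charset=utf-8',
  '.csv': 'text/csv; charset=utf-8',
  '.json': 'application/json',
  '.pdf': 'application/pdf',
  '.mp4': 'video/mp4',
};

// Returns a Content-Type for the file, falling back to a generic binary type
// when the extension is missing or unknown.
function contentTypeFor(filePath: string): string {
  return MIME_TYPES[extname(filePath).toLowerCase()] ?? 'application/octet-stream';
}
```

In the handler, this would replace the fixed value: `res.setHeader('Content-Type', contentTypeFor(filePath));`. The `application/octet-stream` fallback tells the client only that the body is binary data, which is safer than guessing a wrong text type.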

Handling Errors in Streams

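To handle errors during streaming, such as a missing file, attach an `error` listener to the read stream. `pipe()` does not handle errors from the source stream. Without a listener, an `error` event (for example, `ENOENT` for a missing file) is thrown and can crash the process: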

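```typescript
import { createReadStream } from 'fs';

// ... previous code

@Get('stream-safe') // hypothetical route path
streamFileWithErrors(@Res() res: Response) {
  const filePath = 'path/to/your/large/file.txt'; // header setup as before
  const readStream = createReadStream(filePath);

  readStream.on('error', (error: NodeJS.ErrnoException) => {
    // Once data has been sent, the status can no longer change: abort instead
    if (res.headersSent) return res.destroy(error);
    // ENOENT means the file does not exist; report anything else as a server error
    const missing = error.code === 'ENOENT';
    res.status(missing ? 404 : 500).send(missing ? 'File not found' : 'Could not read file');
  });

  readStream.pipe(res);
}

// ... remaining code
```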

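This method responds with 404 when the file does not exist and 500 for other read errors. If the error occurs after part of the file has already been sent, the status line is already on the wire, so the only option left is to abort the connection.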
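Because `createReadStream()` returns immediately even when the file is missing, wrapping the call in `try`/`catch` would not catch the failure: it arrives later as an `error` event. The following plain Node.js sketch shows this behavior and maps the error code to a status. The `statusForFsError` and `statusForPath` helpers are hypothetical, and treating every code other than `ENOENT` as a 500 is an assumption.

```typescript
import { createReadStream } from 'fs';

// Maps a file-system error to an HTTP status: ENOENT (no such file) becomes 404,
// and every other failure (permissions, I/O errors) is treated as a server error.
function statusForFsError(error: NodeJS.ErrnoException): number {
  return error.code === 'ENOENT' ? 404 : 500;
}

// Opens a read stream and resolves with the status a handler would send.
function statusForPath(filePath: string): Promise<number> {
  return new Promise((resolve) => {
    // No exception is thrown here for a missing file; the failure arrives
    // asynchronously as an 'error' event once the open attempt completes.
    const stream = createReadStream(filePath);
    stream.on('error', (error: NodeJS.ErrnoException) => resolve(statusForFsError(error)));
    stream.on('open', () => {
      stream.destroy(); // only probing: release the file descriptor without reading
      resolve(200);
    });
  });
}

statusForPath('no/such/dir/missing-file.txt').then((status) => console.log(status)); // 404
```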

Streaming and Transforming Data with Streams

For advanced scenarios, you can transform the data as it’s being streamed. For instance, here is how you could uppercase text data:

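```typescript
import { createReadStream } from 'fs';
import { Transform } from 'stream';

// ... previous code

@Get('uppercase')
uppercaseFileStream(@Res() res: Response) {
  const filePath = 'path/to/your/large/file.txt';
  res.setHeader('Content-Type', 'text/plain; charset=utf-8');

  const uppercaseTransform = new Transform({
    transform(chunk, encoding, callback) {
      // Push the uppercased text downstream, then signal that this chunk is done
      this.push(chunk.toString().toUpperCase());
      callback();
    },
  });

  // Decoding as UTF-8 here splits chunks on character boundaries, so
  // chunk.toString() above never sees half of a multi-byte character
  const readStream = createReadStream(filePath, { encoding: 'utf8' });

  readStream.pipe(uppercaseTransform).pipe(res);
}

// ... remaining code
```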

This method passes the read stream through a custom uppercase `Transform` stream before piping the result to the response.
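Without an `encoding` on the read stream, chunks arrive as raw `Buffer`s. A multi-byte UTF-8 character such as `é` (two bytes) can then be split across two chunks, and a per-chunk `toString()` turns each half into a replacement character. The following minimal sketch shows a transform that handles this case itself with Node's `StringDecoder`, which holds back an incomplete byte sequence until the rest of it arrives. The names `createUppercaseTransform` and `uppercaseAll` are hypothetical.

```typescript
import { Readable, Transform } from 'stream';
import { StringDecoder } from 'string_decoder';

// Uppercases UTF-8 text arriving as raw Buffers. The StringDecoder keeps any
// incomplete multi-byte sequence at the end of a chunk until the next chunk arrives.
function createUppercaseTransform(): Transform {
  const decoder = new StringDecoder('utf8');
  return new Transform({
    transform(chunk, _encoding, callback) {
      const text = decoder.write(chunk);
      if (text.length > 0) this.push(text.toUpperCase());
      callback();
    },
    flush(callback) {
      // Emit whatever the decoder is still holding once the input ends
      const rest = decoder.end();
      if (rest.length > 0) this.push(rest.toUpperCase());
      callback();
    },
  });
}

// Usage helper: runs Buffers through the transform and collects the output text.
function uppercaseAll(chunks: Buffer[]): Promise<string> {
  return new Promise((resolve, reject) => {
    let output = '';
    Readable.from(chunks)
      .pipe(createUppercaseTransform())
      .on('data', (piece: Buffer) => { output += piece.toString('utf8'); })
      .on('end', () => resolve(output))
      .on('error', reject);
  });
}

// 'café' is 5 bytes; splitting after byte 4 cuts the two-byte 'é' in half
const bytes = Buffer.from('café', 'utf8');
uppercaseAll([bytes.subarray(0, 4), bytes.subarray(4)]).then((text) => console.log(text)); // CAFÉ
```

In the controller, `createUppercaseTransform()` could stand in for `uppercaseTransform` when the read stream is created without an encoding.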

Stream Back Pressure Management

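Back pressure builds when a source produces data faster than the destination can consume it. Unless the source is paused, the unsent data piles up in memory. `pipe()` already handles this automatically. The following example performs the same flow control by hand with `pause()` and `resume()`: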

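```typescript
// ... previous code (readStream = createReadStream(filePath), as before)

// Forward chunks manually, pausing the file read whenever the response is full
readStream.on('data', (chunk) => {
  // write() returns false once the response's internal buffer is full
  if (!res.write(chunk)) {
    readStream.pause();
  }
});

// 'drain' fires when the response can accept more data again
res.on('drain', () => {
  readStream.resume();
});

// Finish the response after the last chunk has been written
readStream.on('end', () => {
  res.end();
});

// ... remaining code
```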

Together, these listeners keep memory use bounded: the file is read only as fast as the client can receive it.
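In practice, the manual loop is rarely needed. `pipe()` applies the same flow control, and `pipeline()` from `stream/promises` (Node.js 15 and later) additionally destroys every stream in the chain if one of them fails. The following plain Node.js sketch uses a deliberately slow in-memory sink as a stand-in for a slow client. `createSlowSink` and its 16-byte `highWaterMark` are illustrative assumptions.

```typescript
import { Readable, Writable } from 'stream';
import { pipeline } from 'stream/promises';

// A deliberately slow destination with a tiny 16-byte buffer, so write() returns
// false almost immediately and the source must wait for 'drain'.
function createSlowSink(received: Buffer[]): Writable {
  return new Writable({
    highWaterMark: 16,
    write(chunk, _encoding, callback) {
      received.push(chunk);
      setTimeout(() => callback(), 1); // simulate a consumer that takes time per chunk
    },
  });
}

async function main(): Promise<void> {
  const received: Buffer[] = [];
  const input = Array.from({ length: 50 }, (_, i) => Buffer.from(`chunk-${i};`));

  // pipeline() pauses the source while the sink is full, resumes it on 'drain',
  // and destroys both streams if either one errors.
  await pipeline(Readable.from(input), createSlowSink(received));

  console.log(`received ${Buffer.concat(received).length} bytes`);
}

main().catch((error) => {
  console.error(error);
  process.exitCode = 1;
});
```

Inside an `async` handler, the equivalent would be `await pipeline(createReadStream(filePath), res)`. Because `pipeline()` destroys the response on failure, a missing file would reset the connection rather than produce a 404. If you want to send an error status, you need to detect that case before streaming begins.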

Conclusion

In this tutorial, we covered how to stream large files in NestJS to keep memory usage low and build scalable applications. Implementing streaming requires an understanding of the Node.js streams API and careful handling of edge cases such as errors and back pressure.