In modern web development, capturing audio and video (AV) in real time directly in the browser has become increasingly valuable. It underpins features such as video calls, live streaming, and video conferencing. This article walks through implementing real-time AV capture in your web apps using JavaScript.

Using the getUserMedia API

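The heart of real-time audio and video capture in web apps is the getUserMedia API, which lets a web application access a user's camera and microphone with their permission. It is defined in the W3C Media Capture and Streams specification, which was developed alongside WebRTC, so it is a standardized way to obtain media streams. Browsers expose it only in secure contexts, meaning pages served over HTTPS or from localhost during development.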
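Because the API is missing in older browsers and on insecure pages, it can help to check for it before requesting a stream. The following is a minimal sketch of such a check. The function name canCaptureMedia and its env parameter are illustrative assumptions, not part of any standard; env stands in for the global window object so the check can also be exercised outside a browser.

```javascript
// Hypothetical helper: returns true when getUserMedia can be called at all.
// `env` is expected to look like `window` (isSecureContext, navigator).
function canCaptureMedia(env) {
  const devices = env.navigator && env.navigator.mediaDevices;
  return Boolean(
    env.isSecureContext &&
    devices &&
    typeof devices.getUserMedia === 'function'
  );
}

// In a page you would typically call it as:
//   if (!canCaptureMedia(window)) { /* show a fallback message */ }
```

A check like this lets the page show a fallback message up front instead of failing when navigator.mediaDevices turns out to be undefined.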

Basic Usage

The getUserMedia API is accessible through the navigator.mediaDevices object. Here’s a simple example that requests the user's camera and microphone and shows the resulting stream in a video element:

    navigator.mediaDevices.getUserMedia({ video: true, audio: true })
      .then(stream => {
        const videoElement = document.querySelector('video');
        videoElement.srcObject = stream;
        videoElement.onloadedmetadata = () => {
          videoElement.play();
        };
      })
      .catch(error => {
        console.error('Error accessing media devices.', error);
      });

In the above code, we request both video and audio streams. Once a stream is successfully obtained, it's assigned to the srcObject property of a video element, which plays the stream.
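A stream keeps the camera and microphone active until its tracks are stopped, so it is usually worth releasing them when capture ends. The sketch below shows one way to do that. The function name stopCapture is an assumption made for illustration; getTracks() and stop() are the standard MediaStream and MediaStreamTrack methods.

```javascript
// Hypothetical helper: stops every track on the stream and detaches it
// from the video element (if one is given). Returns the number of tracks stopped.
function stopCapture(stream, videoElement) {
  const tracks = stream.getTracks();
  tracks.forEach(track => track.stop());
  if (videoElement) {
    videoElement.srcObject = null;
  }
  return tracks.length;
}

// In a page you might call it from a "stop" button handler:
//   stopCapture(videoElement.srcObject, videoElement);
```

Once the tracks are stopped, the browser's camera or microphone indicator should turn off.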

Handling User Permissions

Getting permission for AV capture is critical. When the getUserMedia method is called, the browser prompts the user to allow access to their devices. If the user denies access, the returned promise rejects with an error named NotAllowedError; other failures reject with different error names. You need to handle both cases:

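    // Handle the user denying permission
    navigator.mediaDevices.getUserMedia({ video: true, audio: true })
      .then(stream => {
        // Use the stream as in the previous example
      })
      .catch(error => {
        if (error.name === 'NotAllowedError') {
          alert('Permissions to access the camera and microphone have been denied.');
        } else {
          console.error('getUserMedia error:', error);
        }
      });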
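The same handling can be written with async/await so that the calling code receives a plain result object instead of branching inside a catch handler. This is a sketch under assumptions: the function name requestCapture and the status values 'granted', 'denied', and 'failed' are made up for illustration, and mediaDevices is passed in as a parameter (normally navigator.mediaDevices) so it can be stubbed.

```javascript
// Hypothetical wrapper around getUserMedia that never throws.
// Resolves to { status: 'granted', stream } on success, or
// { status: 'denied' | 'failed', error } on failure.
async function requestCapture(mediaDevices, constraints) {
  try {
    const stream = await mediaDevices.getUserMedia(constraints);
    return { status: 'granted', stream };
  } catch (error) {
    // NotAllowedError is the name reported when permission is refused.
    const status = error.name === 'NotAllowedError' ? 'denied' : 'failed';
    return { status, error };
  }
}

// In a page:
//   const result = await requestCapture(navigator.mediaDevices, { video: true, audio: true });
//   if (result.status === 'granted') { videoElement.srcObject = result.stream; }
```

Returning a status keeps the UI code free to decide how to react, for example showing a "permission needed" banner for 'denied' and a generic error for 'failed'.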

Processing the Media Stream

Once you have a media stream, you can process it in several ways: applying filters, capturing snapshots, or sending it to other users over a WebRTC connection. Here’s a basic example that captures a snapshot from the video stream:

    function captureSnapshot(videoElement, canvasElement) {
      const context = canvasElement.getContext('2d');
      context.drawImage(videoElement, 0, 0, canvasElement.width, canvasElement.height);

      // Save snapshot as an image
      const dataURL = canvasElement.toDataURL('image/png');
      console.log(dataURL);
    }

    // Example: Call this when a "capture" button is clicked
    const captureButton = document.querySelector('#captureButton');
    captureButton.addEventListener('click', () => {
      const videoElement = document.querySelector('video');
      const canvasElement = document.querySelector('canvas');
      captureSnapshot(videoElement, canvasElement);
    });

The above function copies the current video frame to a canvas where you can manipulate it further or convert it to an image format.
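Note that drawImage stretches the frame to whatever size the canvas happens to be, which can distort the snapshot if the canvas and the video have different aspect ratios. One possible remedy is to size the canvas from the video's intrinsic dimensions first. The helper below is a sketch of that calculation; the name fitSnapshotSize and its maximum-size parameters are assumptions for illustration.

```javascript
// Hypothetical helper: computes a snapshot size that fits inside
// maxWidth x maxHeight, keeps the video's aspect ratio, and never upscales.
function fitSnapshotSize(videoWidth, videoHeight, maxWidth, maxHeight) {
  const scale = Math.min(maxWidth / videoWidth, maxHeight / videoHeight, 1);
  return {
    width: Math.round(videoWidth * scale),
    height: Math.round(videoHeight * scale),
  };
}

// In a page, before calling captureSnapshot:
//   const size = fitSnapshotSize(videoElement.videoWidth, videoElement.videoHeight, 640, 480);
//   canvasElement.width = size.width;
//   canvasElement.height = size.height;
```

The videoWidth and videoHeight properties report 0 until the video's metadata has loaded, so this is best called after playback has started.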

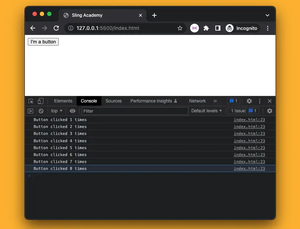

Error Handling and Debugging

Debugging AV capture can be difficult because failures depend on the user's hardware and permission settings. Log every error, including its name and message, and keep the browser console open while testing. Consider adding UI feedback so that users know when media acquisition fails and why.
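As one way to provide that feedback, the error name reported by getUserMedia can be translated into a message for the user. The sketch below covers a few of the error names browsers commonly report; the function name describeMediaError and the message wording are assumptions for illustration, and the list is not exhaustive.

```javascript
// Hypothetical lookup from getUserMedia error names to user-facing messages.
const MEDIA_ERROR_MESSAGES = new Map([
  ['NotAllowedError', 'Access to the camera and microphone was denied.'],
  ['NotFoundError', 'No camera or microphone was found on this device.'],
  ['NotReadableError', 'The camera or microphone could not be started. It may be in use by another application.'],
  ['OverconstrainedError', 'No available device supports the requested settings.'],
]);

// Returns a message for known error names and a generic one otherwise.
function describeMediaError(error) {
  return MEDIA_ERROR_MESSAGES.get(error.name) ||
    'The camera or microphone could not be accessed.';
}

// In a page, inside a catch handler:
//   statusElement.textContent = describeMediaError(error);
//   console.error('getUserMedia error:', error.name, error.message);
```

Showing the message in the page while still logging the full error keeps users informed without hiding details you need for debugging.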

By following the instructions in this article, developers can effectively integrate real-time audio and video capture into their web applications, enhancing interactivity and providing functionalities that meet today’s digital communication demands. Keep experimenting with other features of the WebRTC API to further enrich your application experience.