When you evolve your application, your database schema may need to change. This tutorial provides a step-by-step guide on how to migrate data in Mongoose after altering your schema so your application stays consistent and robust.

Getting Started

As you continue developing your Mongoose app, you've probably reached a point where your initial schema design no longer keeps up with your application's requirements. Maybe you've added a new feature, or you need to restructure your data for performance. Whatever the cause, you need to migrate your existing data to the new schema without losing any of it or incurring significant downtime.

Before you begin, back up your database so you can restore the previous state if anything goes wrong during the migration.

Understanding Schema Evolution in Mongoose

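Mongoose schemas are the blueprints for your documents in MongoDB. Simple changes, such as adding a new optional field, can usually be made by extending the existing schema: documents that are already stored just lack the field until it is written. More complex changes, like renaming fields or changing field types, usually require a migration script, because existing documents keep their old keys and values until something rewrites them.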
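Changing a field's type is a typical case. Suppose, hypothetically, that some older documents stored `createdAt` as an ISO date string or a millisecond timestamp rather than a `Date`. A migration script then needs a conversion step like the `toDate()` helper sketched below (a name invented for this example). It returns `null` for values it cannot parse, so the script can decide how to handle them instead of silently writing an invalid date.

```javascript
// Hypothetical helper: normalize a legacy createdAt value (Date, ISO string,
// or millisecond timestamp) into a Date, or null if it cannot be parsed.
function toDate(value) {
  if (value instanceof Date) {
    // Reject Date objects that hold an invalid time
    return Number.isNaN(value.getTime()) ? null : value;
  }
  if (typeof value === 'string' || typeof value === 'number') {
    const parsed = new Date(value);
    // new Date() returns an "Invalid Date" for unparseable input
    return Number.isNaN(parsed.getTime()) ? null : parsed;
  }
  // null, undefined, or an unexpected type
  return null;
}

// Usage inside a migration: fall back to a default only when parsing fails
const legacyDoc = { name: 'Lamp', createdAt: '2021-03-04T00:00:00Z' };
const createdOn = toDate(legacyDoc.createdAt) ?? new Date();
console.log(createdOn.toISOString()); // 2021-03-04T00:00:00.000Z
```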

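Keep in mind that Mongoose middleware (pre and post hooks) can complicate migrations, because hooks run for some operations and not others. For example, `pre('save')` hooks run when you call `save()`, but not for bulk operations such as `insertMany()` or `updateMany()`. Decide whether your migration should trigger those hooks and choose its write methods accordingly.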

Incremental Migration

An incremental migration strategy helps minimize downtime. Instead of converting everything in one pass, a background process copies documents from the old collection to the new one in small batches over time, while the application keeps serving requests.
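The shape of such a background process can be sketched independently of any database. In the minimal sketch below, `migrateIncrementally`, `fetchBatch`, `writeBatch`, and `transform` are hypothetical names. With Mongoose you might implement `fetchBatch` as `OldItem.find({ _id: { $gt: afterId } }).sort({ _id: 1 }).limit(limit).lean()` and `writeBatch` as `NewItem.insertMany(docs)`. Here, in-memory arrays stand in for the two collections so the loop can run on its own.

```javascript
// Minimal sketch of an incremental migration: copy documents in small batches,
// keyed by the last _id seen, pausing between batches to limit load.
const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

async function migrateIncrementally({ fetchBatch, writeBatch, transform, batchSize = 100, pauseMs = 50 }) {
  let lastId = null;
  let migrated = 0;

  while (true) {
    // Fetch the next batch of old documents whose _id is greater than lastId
    const batch = await fetchBatch(lastId, batchSize);
    if (batch.length === 0) break;

    await writeBatch(batch.map(transform));
    migrated += batch.length;
    lastId = batch[batch.length - 1]._id;

    // Yield between batches so the migration doesn't starve live traffic
    await sleep(pauseMs);
  }
  return migrated;
}

// Usage with in-memory arrays (sorted by _id) standing in for the collections
const oldCollection = [
  { _id: 1, name: 'Lamp', detail: 'Desk lamp' },
  { _id: 2, name: 'Chair', detail: 'Oak chair' },
  { _id: 3, name: 'Rug', detail: 'Wool rug' },
];
const newCollection = [];

migrateIncrementally({
  fetchBatch: async (afterId, limit) =>
    oldCollection.filter((d) => afterId === null || d._id > afterId).slice(0, limit),
  writeBatch: async (docs) => { newCollection.push(...docs); },
  transform: (d) => ({ _id: d._id, title: d.name, description: d.detail }),
  batchSize: 2,
  pauseMs: 0,
}).then((count) => console.log(`Migrated ${count} documents`)); // Migrated 3 documents
```

Because each batch starts after the last `_id` already processed, the loop can also be stopped and resumed from a saved `lastId`.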

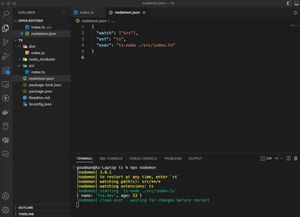

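```javascript
const mongoose = require('mongoose');

// Connect to the MongoDB database. The useNewUrlParser and useUnifiedTopology
// options are not needed in Mongoose 6 and later, which always behaves as if
// they were set. 127.0.0.1 avoids newer Node.js versions resolving
// "localhost" to the IPv6 address ::1.
mongoose.connect('mongodb://127.0.0.1/mydatabase');

// Old schema. By default Mongoose maps the OldItem model to the "olditems"
// collection; pass a third argument to mongoose.model() if your existing
// data lives in a differently named collection.
const OldItemSchema = new mongoose.Schema({
  name: String,
  detail: String,
  createdAt: Date,
});

const OldItem = mongoose.model('OldItem', OldItemSchema);

// New schema
const NewItemSchema = new mongoose.Schema({
  title: String, // renamed from 'name'
  description: String, // renamed from 'detail'
  createdOn: Date, // renamed from 'createdAt'
});

const NewItem = mongoose.model('NewItem', NewItemSchema);
```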

This snippet defines both schemas, old and new. An incremental migration script then iterates through the documents in the OldItem collection and creates a corresponding NewItem document for each, with the fields renamed.

Writing the Migration Script

The heart of your migration is a script that systematically walks through your existing documents, translates each one to the new schema, and saves the result. Avoid loading the entire collection into memory at once, especially with large datasets: stream documents with a cursor or process them in batches.

Here’s a basic example script:

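```javascript
async function migrateData() {
  // A cursor streams documents one at a time instead of loading the whole
  // collection into memory; lean() returns plain objects, which are cheaper.
  const cursor = OldItem.find().lean().cursor();

  for await (const doc of cursor) {
    // Map the old field names onto the new schema
    const newItem = new NewItem({
      title: doc.name,
      description: doc.detail,
      createdOn: doc.createdAt || new Date(),
    });

    await newItem.save();
  }
}

migrateData()
  .then(() => console.log('Migration completed.'))
  .catch((err) => console.error('Migration failed:', err))
  .finally(() => mongoose.disconnect()); // Close the connection so the script can exit
```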

This script creates a cursor from the old collection, iterates over each document, and saves a new document to the new collection with the appropriate transformations.
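Because this script inserts a new document on every run, running it twice duplicates the data. One way to make it safe to re-run, shown here as an alternative to `save()`, is to reuse each old document's `_id` for its new counterpart and write with upserts through `Model.bulkWrite()`. The hypothetical `buildUpsertOps()` helper below only builds the operation objects, so it can be tested without a database. With `$setOnInsert`, documents that were already migrated are left untouched on a second run. Note that writes through `bulkWrite()` do not trigger `save` middleware.

```javascript
// Hypothetical helper: build upsert operations in the shape accepted by
// Model.bulkWrite(). Reusing the old _id makes re-runs skip migrated documents.
function buildUpsertOps(oldDocs) {
  return oldDocs.map((doc) => ({
    updateOne: {
      filter: { _id: doc._id },
      update: {
        // Only applied when the upsert inserts a new document
        $setOnInsert: {
          title: doc.name,
          description: doc.detail,
          createdOn: doc.createdAt || new Date(),
        },
      },
      upsert: true, // Insert when no document with this _id exists yet
    },
  }));
}

// With a live connection you would run something like:
//   await NewItem.bulkWrite(buildUpsertOps(batchOfLeanOldDocs));
const ops = buildUpsertOps([{ _id: 'a1', name: 'Lamp', detail: 'Desk lamp' }]);
console.log(JSON.stringify(ops[0].updateOne.filter)); // {"_id":"a1"}
```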

Handling Complex Transformations

Sometimes, your schema changes require more than simple renaming; you may have to split fields, combine them, or perform some complex logic to transform the data.

Scenarios like this call for a more sophisticated script. It can still be built from async functions and Mongoose's query methods, but it should process documents in batches and write each batch in bulk, for example with `insertMany()`.

Consider an old schema where an address field is a simple string, but you want to split it into a more structured format in the new schema containing distinct fields for street, city, and zip code. Here’s how you might write your migration script:

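```javascript
// parseAddress() is a helper you would write yourself: it should convert the
// old address string into an object with { street, city, zip }.
// This assumes NewItemSchema also defines street, city, and zip paths; with
// Mongoose's default strict mode, keys missing from the schema are silently dropped.
async function complexMigration() {
  const batchLimit = 100; // Documents per batch, to keep memory usage bounded
  let lastId = null;

  while (true) {
    // Page by _id rather than skip(): skip() rescans every earlier document,
    // and without a sort the order between batches is not guaranteed.
    const filter = lastId === null ? {} : { _id: { $gt: lastId } };
    // lean() returns plain objects, so fields that OldItemSchema does not
    // declare (such as address) can still be read.
    const oldItems = await OldItem.find(filter).sort({ _id: 1 }).limit(batchLimit).lean();

    if (oldItems.length === 0) break;

    const newDocs = oldItems.map((doc) => ({
      ...parseAddress(doc.address), // Spread { street, city, zip } into top-level keys
      title: doc.name,
      description: doc.detail,
      createdOn: doc.createdAt || new Date(),
    }));

    // Write the whole batch in a single round trip
    await NewItem.insertMany(newDocs);

    // Remember where this batch ended so the next query starts after it
    lastId = oldItems[oldItems.length - 1]._id;
  }
}

complexMigration()
  .then(() => console.log('Complex migration completed.'))
  .catch((err) => console.error('Complex migration failed:', err))
  .finally(() => mongoose.disconnect());
```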

Processing documents in fixed-size batches keeps memory usage bounded, so the script is far less likely to fail on a large collection by running out of memory.
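The complex migration relies on a `parseAddress()` helper that you write yourself. Here is one possible sketch, assuming old addresses were stored as comma-separated `"street, city, zip"` strings. Real address data is rarely that tidy, so values that don't fit the expected shape are kept whole in `street` rather than discarded.

```javascript
// Hypothetical parseAddress() helper. Assumes addresses were stored as
// "street, city, zip"; anything else is preserved in street so no data is lost.
function parseAddress(address) {
  if (typeof address !== 'string' || address.trim() === '') {
    return { street: null, city: null, zip: null };
  }
  const parts = address.split(',').map((part) => part.trim());
  if (parts.length !== 3) {
    // Unexpected format: keep the original text instead of guessing
    return { street: address.trim(), city: null, zip: null };
  }
  const [street, city, zip] = parts;
  return { street, city, zip };
}

console.log(parseAddress('12 Oak Ave, Springfield, 62704'));
// { street: '12 Oak Ave', city: 'Springfield', zip: '62704' }
```

After the migration, you can query for documents where `city` is `null` to find addresses that need manual review.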

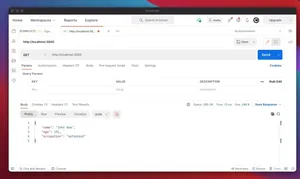

Validating the Migration

After running your migration, verify that the data was transformed and saved correctly. Manual spot checks combined with automated tests help confirm the integrity of the migrated data.

A few queries that compare document counts or check that newly required fields exist can confirm that the migration succeeded. Also inspect the contents of the new documents to make sure no data was corrupted or lost along the way.
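These checks can also be scripted. The sketch below compares plain document arrays, such as the results of `OldItem.find().lean()` and `NewItem.find().lean()`. The function name, the required-field list, and the assumption that each new document reuses its old document's `_id` are all illustrative, so adapt the matching key if your migration assigns new ids. For large collections, compare `countDocuments()` results and validate a sample rather than loading everything into memory.

```javascript
// Hypothetical post-migration check on plain objects. Assumes each new document
// reuses its old document's _id; returns a list of human-readable problems.
function validateMigration(oldDocs, newDocs, requiredFields = ['title', 'description', 'createdOn']) {
  const problems = [];

  // 1. Document counts should match
  if (oldDocs.length !== newDocs.length) {
    problems.push(`count mismatch: ${oldDocs.length} old vs ${newDocs.length} new`);
  }

  // Index new documents by _id (String() so ObjectIds compare by value)
  const newById = new Map(newDocs.map((doc) => [String(doc._id), doc]));

  for (const oldDoc of oldDocs) {
    const newDoc = newById.get(String(oldDoc._id));
    // 2. Every old document should have a migrated counterpart
    if (!newDoc) {
      problems.push(`missing: ${oldDoc._id}`);
      continue;
    }
    // 3. Required fields should be present
    for (const field of requiredFields) {
      if (newDoc[field] === undefined || newDoc[field] === null) {
        problems.push(`${oldDoc._id}: ${field} is empty`);
      }
    }
    // 4. Renamed values should be carried over unchanged
    if (newDoc.title !== oldDoc.name || newDoc.description !== oldDoc.detail) {
      problems.push(`${oldDoc._id}: renamed fields do not match`);
    }
  }
  return problems;
}

const report = validateMigration(
  [{ _id: 1, name: 'Lamp', detail: 'Desk lamp' }],
  [{ _id: 1, title: 'Lamp', description: 'Desk lamp', createdOn: new Date(0) }],
);
console.log(report); // []
```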

Conclusion

In this tutorial, we have covered the basics of schema migrations in Mongoose, including incremental migration strategies and writing both simple and complex migration scripts. By understanding the fundamental concepts and applying best practices for data migration, you can evolve your database alongside your application without sacrificing data integrity or uptime. It’s always crucial to begin with a backup and end with thorough testing to ensure a smooth transition to your new schema.