Introduction

When working with MongoDB, particularly with large collections, performance and scalability are two pivotal concerns. Mongoose, an object modeling library for MongoDB designed to work in an asynchronous environment, adds a layer of convenience for CRUD operations. With very large collections, however, extra care is needed to keep data handling efficient. This article covers techniques and best practices in Mongoose for working with very large data sets, using current Node.js and JavaScript/TypeScript syntax.

Efficient Schema Design

Before diving into querying techniques, it’s crucial to start with an optimized schema. Design choices such as embedding related data instead of relying on populate(), defining indexes that match your query patterns, and planning for sharding lay the foundation for better performance.

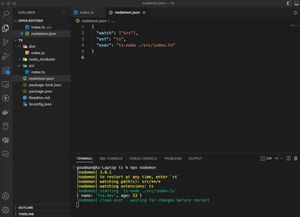

```javascript
import mongoose from 'mongoose';

const userSchema = new mongoose.Schema({
  name: String,
  age: Number,
  // Other fields
});

userSchema.index({ name: 1 }); // An ascending index on the 'name' field

const User = mongoose.model('User', userSchema);
```

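The schema defines an ascending index on the name field. Indexes let MongoDB locate matching documents, and return them in sorted order, without scanning the entire collection. Each index also costs storage and adds work to every write, so index the fields your queries actually filter and sort on.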
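You can check whether a query actually uses an index with Mongoose's Query.prototype.explain(). It returns MongoDB's query plan instead of documents. The helper below is a minimal sketch, and summarizeWinningPlan and collectPlanStages are hypothetical names. The shape of explain output varies with MongoDB version and deployment, because newer servers and sharded clusters wrap the plan differently. For that reason the helper walks the whole winning-plan tree rather than assuming one layout. A COLLSCAN stage means MongoDB examined every document in the collection.

```javascript
// Recursively collect every "stage" name in an explain() result. Walking all
// nested objects and arrays handles inputStage/inputStages trees as well as
// wrappers such as queryPlan (newer servers) or shards (sharded clusters).
function collectPlanStages(node, stages = []) {
  if (Array.isArray(node)) {
    for (const item of node) collectPlanStages(item, stages);
  } else if (node !== null && typeof node === 'object') {
    if (typeof node.stage === 'string') stages.push(node.stage);
    for (const value of Object.values(node)) collectPlanStages(value, stages);
  }
  return stages;
}

// Summarize the winning plan; collectionScan === true is the red flag.
function summarizeWinningPlan(explainResult) {
  // Some versions return an array of explain documents; use the first one.
  const doc = Array.isArray(explainResult) ? explainResult[0] : explainResult;
  const stages = collectPlanStages(doc?.queryPlanner?.winningPlan ?? {});
  return { stages, collectionScan: stages.includes('COLLSCAN') };
}

// Usage with a real model (requires a MongoDB connection):
// const plan = await User.find({ name: 'Alice' }).explain('queryPlanner');
// console.log(summarizeWinningPlan(plan));
```

With the name index in place, the reported stages should show an index scan rather than COLLSCAN.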

Stream Large Datasets

One common technique for large datasets is streaming: documents are processed as they arrive from the database rather than all being loaded into memory at once.

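```javascript
// Query.prototype.stream() was removed in Mongoose 5; cursor() returns a
// QueryCursor, which is a Node.js Readable stream in object mode.
const cursor = User.find({}).cursor();

cursor
  .on('data', (doc) => {
    // Process each document
  })
  .on('error', (err) => {
    // Handle error
  })
  .on('end', () => {
    // Finalize
  });
```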

Here the query cursor passes documents to the handler one at a time as MongoDB returns them in batches. Memory use therefore depends on the batch size rather than on the size of the collection. This avoids the out-of-memory crashes that can occur when a large result set is loaded all at once.
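Mongoose query cursors are also async iterable, so a for await...of loop can replace the event handlers. One practical advantage is backpressure: the loop pulls the next document only after the previous iteration's awaited work has finished. A 'data' handler that starts async work does not pause the stream. The sketch below groups documents into batches, which suits follow-up bulk writes. processInBatches and its parameters are hypothetical. It accepts any async iterable, so it can be exercised without a database.

```javascript
// Consume any async iterable (for example a Mongoose QueryCursor) in
// fixed-size batches, awaiting the handler before pulling more documents.
async function processInBatches(source, batchSize, handleBatch) {
  if (!Number.isInteger(batchSize) || batchSize < 1) {
    throw new RangeError('batchSize must be a positive integer');
  }
  let batch = [];
  let total = 0;
  for await (const doc of source) {
    batch.push(doc);
    if (batch.length === batchSize) {
      await handleBatch(batch); // the cursor is not advanced until this resolves
      total += batch.length;
      batch = [];
    }
  }
  if (batch.length > 0) {
    // Flush the final, partially filled batch.
    await handleBatch(batch);
    total += batch.length;
  }
  return total; // number of documents handed to handleBatch
}

// Usage with a real model (requires a MongoDB connection):
// const cursor = User.find({}).lean().cursor();
// const count = await processInBatches(cursor, 500, async (users) => {
//   // e.g. build and send one bulk write per batch
// });
```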

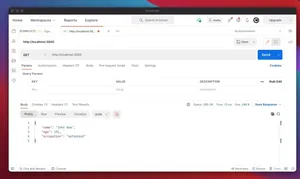

Paginate Large Result Sets

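Another crucial technique for handling large amounts of data is pagination, which serves only a subset of results at a time. The skip() and limit() methods implement it directly. Be aware, though, that MongoDB still walks past every skipped document, so deep pages become progressively slower on very large collections. Range-based pagination on an indexed field such as _id avoids that cost.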

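```javascript
async function getPaginatedResults(page, limit) {
  // A stable sort is required; without one, MongoDB does not guarantee
  // result order, so documents can repeat or be missed across pages.
  const results = await User.find()
    .sort({ _id: 1 })
    .skip((page - 1) * limit)
    .limit(limit)
    .exec();

  return results;
}
```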

The function uses async/await, which handles promises more readably than nested callbacks.
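For deep pages in very large collections, a range-based (keyset) variant avoids skip() entirely. Each request asks for documents whose _id is greater than the last _id already seen, and the default _id index can answer that directly. This sketch rests on several assumptions: getPageAfter is a hypothetical helper, results are ordered by _id only, and clients page forward by passing back nextCursor. The trade-off is that clients can no longer jump straight to an arbitrary page number.

```javascript
// Keyset pagination: filter on _id > lastId instead of skipping documents.
// Pass lastId = null (or undefined) to fetch the first page.
async function getPageAfter(Model, lastId, limit) {
  const filter = lastId == null ? {} : { _id: { $gt: lastId } };
  const docs = await Model.find(filter)
    .sort({ _id: 1 }) // must sort on the same field used in the range filter
    .limit(limit)
    .lean()
    .exec();
  // A full page means there may be more; hand back the last _id as the cursor.
  const nextCursor = docs.length === limit ? docs[docs.length - 1]._id : null;
  return { docs, nextCursor };
}

// Usage with a real model (requires a MongoDB connection):
// let { docs, nextCursor } = await getPageAfter(User, null, 100);
// while (nextCursor !== null) {
//   ({ docs, nextCursor } = await getPageAfter(User, nextCursor, 100));
// }
```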

Handling Insertions Into Large Collections

Inserting documents efficiently into large collections is best done with bulk operations, which send many documents to the server in a single call instead of one request per document. Mongoose provides the insertMany() method for this purpose.

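```javascript
const documents = [{ name: 'Alice' }, { name: 'Bob' }];

try {
  // Mongoose 7+ returns a promise here; callbacks are no longer supported
  const docs = await User.insertMany(documents, { ordered: false });
  console.log(`Inserted ${docs.length} documents`);
} catch (err) {
  // With ordered: false, documents that did not fail are still inserted
  console.error('Some inserts failed:', err.message);
}
```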

The ‘ordered: false’ option tells MongoDB to keep inserting the remaining documents when one of them fails, for example because of a duplicate key, instead of stopping at the first error.
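When the source array is very large, one option is to split it into fixed-size chunks and call insertMany() once per chunk. Each request then stays a manageable size, and a failing chunk does not abort the ones after it. insertInChunks and the default chunk size of 1,000 are illustrative assumptions, not Mongoose APIs or tuned values.

```javascript
// Insert a large array in fixed-size chunks with unordered inserts.
async function insertInChunks(Model, documents, chunkSize = 1000) {
  if (!Number.isInteger(chunkSize) || chunkSize < 1) {
    throw new RangeError('chunkSize must be a positive integer');
  }
  let attempted = 0;
  const failures = [];
  for (let start = 0; start < documents.length; start += chunkSize) {
    const chunk = documents.slice(start, start + chunkSize);
    attempted += chunk.length;
    try {
      await Model.insertMany(chunk, { ordered: false });
    } catch (err) {
      // For write errors such as duplicate keys, MongoDB still attempts the
      // other documents in the chunk; record the error for inspection or retry.
      failures.push({ start, error: err });
    }
  }
  return { attempted, failures };
}

// Usage with a real model (requires a MongoDB connection):
// const { attempted, failures } = await insertInChunks(User, bigArrayOfUsers);
```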

Optimizing Queries for Performance

Adjusting your Mongoose queries to minimize the work the database and your application must do can yield significant performance benefits. Key principles include querying only the fields you need, using lean queries (which skip building full Mongoose documents) for read-only cases, and avoiding unnecessary computation in aggregation pipelines.

```javascript
// Select only name and age and exclude _id; lean() returns
// plain JS objects, not Mongoose documents
const docs = await User.find({}, 'name age -_id').lean();
```

Since Mongoose 7, model and query methods such as find(), exec(), and insertMany() no longer accept callbacks, so use promises with async/await instead.
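The aggregation advice above mostly comes down to stage order. Placing $match first lets MongoDB use an index on the matched field, if one exists, and shrinks the input to the more expensive $group and $sort stages. This is a minimal sketch that assumes the age field selected in the query above, and buildAgeHistogramPipeline is a hypothetical helper. The schema shown earlier defines no index on age, so you would need to add one for the $match to be index-backed.

```javascript
// Hypothetical helper: count users per age, for users at least minAge old.
function buildAgeHistogramPipeline(minAge) {
  return [
    // Filter first: can use an index on age and reduces the number of
    // documents every later stage has to process.
    { $match: { age: { $gte: minAge } } },
    // Group only the documents that survived the filter.
    { $group: { _id: '$age', count: { $sum: 1 } } },
    // Sort the (much smaller) grouped output by age, ascending.
    { $sort: { _id: 1 } },
  ];
}

// Usage with a real model (requires a MongoDB connection):
// const histogram = await User.aggregate(buildAgeHistogramPipeline(18));
```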

Conclusion

To work effectively with very large collections in Mongoose, design schemas with proper indexing, use lean queries and field projection, stream large result sets through cursors, paginate results, batch insertions with bulk operations, and keep refining queries. Together these practices keep interactions with large MongoDB collections efficient, scalable, and responsive in production environments.