Overview

Web applications routinely need to convert data from one format to another. JavaScript Object Notation (JSON) is a common data exchange format because it is text-based, human-readable, and easy to parse. Comma-Separated Values (CSV), on the other hand, is widely used for importing and exporting data in spreadsheets and databases.

This tutorial walks through converting JSON to CSV in Node.js using several methods and libraries. We’ll start with a basic example and gradually move to more advanced scenarios that handle complex JSON structures. The examples use current JavaScript and Node.js features such as async/await, arrow functions, and ES modules.

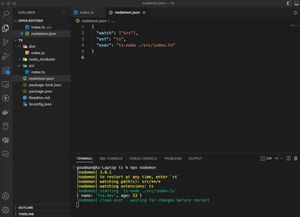

Simple Conversion Without Libraries

In its simplest form, converting JSON to CSV does not require a third-party library. Here’s a minimal example that assumes an array of flat JSON objects that all share the same keys and whose values contain no commas, quotes, or line breaks:

import fs from 'fs';

const json = [{ name: "John Doe", age: 30 }, { name: "Jane Doe", age: 25 }];

const convertJSONToCSV = json => {
  const header = Object.keys(json[0]).join(',');
  const rows = json.map(obj =>
    Object.values(obj).join(',')
  ).join('\n');
  return `${header}\n${rows}`;
};

const csv = convertJSONToCSV(json);

fs.writeFile('output.csv', csv, err => {
  if (err) throw err;
  console.log('CSV file has been saved.');
});
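The minimal version joins values with commas as-is, so a value that itself contains a comma, a double quote, or a line break would corrupt the output. The sketch below shows one way to add the usual CSV quoting rule (wrap such values in double quotes and double any embedded quotes). The names `escapeCsvField` and `convertJSONToCSVSafe` are hypothetical helpers introduced for illustration, and the sketch still assumes flat objects whose keys match those of the first record.

```javascript
// Quote a single value using the common CSV convention:
// wrap it in double quotes when it contains a comma, quote, or line break,
// and double any embedded quotes. null/undefined become empty fields.
const escapeCsvField = value => {
  const text = value === null || value === undefined ? '' : String(value);
  return /[",\r\n]/.test(text) ? `"${text.replace(/"/g, '""')}"` : text;
};

// Hypothetical helper: same idea as convertJSONToCSV, but with escaping
// and a guard for empty input. Column order comes from the first record.
const convertJSONToCSVSafe = records => {
  if (records.length === 0) return '';
  const keys = Object.keys(records[0]);
  const header = keys.map(escapeCsvField).join(',');
  const rows = records.map(record =>
    keys.map(key => escapeCsvField(record[key])).join(',')
  );
  return [header, ...rows].join('\n');
};

console.log(convertJSONToCSVSafe([
  { name: 'Doe, John', note: 'said "hi"' },
  { name: 'Jane Doe', note: null }
]));
```

Reading values by key rather than with `Object.values` also keeps columns aligned when records list their properties in a different order.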

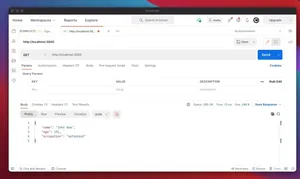

Using a Library

When dealing with more complex data structures, or when you need features such as column selection, ordering, custom headers, or handling of nested objects and arrays, a dedicated CSV conversion library is advisable. One popular option is ‘json2csv’.

First, install json2csv by running:

npm install json2csv

Below is how you can use it:

import { Parser } from 'json2csv';
import fs from 'fs';

const json = [{ name: "John Doe", age: 30 }, { name: "Jane Doe", age: 25 }];

const fields = ['name', 'age'];
const opts = { fields };

try {
  const parser = new Parser(opts);
  const csv = parser.parse(json);
  fs.writeFileSync('output.csv', csv);
  console.log('CSV file saved successfully.');
} catch (err) {
  console.error('Error converting JSON to CSV', err);
}
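To make concrete what column selection, ordering, and custom headers amount to, here is a library-free sketch of the same idea. It is not how ‘json2csv’ is implemented; the `columns` spec and the `toCsvWithColumns` helper are hypothetical names used only for illustration, and quoting is deliberately left out.

```javascript
// Hypothetical column spec: output order follows the array order,
// `label` is the header text and `key` is the property to read.
const columns = [
  { label: 'Age', key: 'age' },
  { label: 'Full Name', key: 'name' }
];

// Assumes values contain no commas, quotes, or line breaks (no quoting here).
// Properties not listed in `columns` (such as email below) are dropped.
const toCsvWithColumns = (records, columnSpec) => {
  const header = columnSpec.map(column => column.label).join(',');
  const rows = records.map(record =>
    columnSpec.map(column => record[column.key] ?? '').join(',')
  );
  return [header, ...rows].join('\n');
};

console.log(toCsvWithColumns(
  [{ name: 'John Doe', age: 30, email: 'john@example.com' }],
  columns
));
```

A library earns its place once quoting, nested values, and large inputs need to be handled together with these options.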

Advanced Conversion with json2csv

To handle more complex JSON data structures, such as nested objects and arrays, ‘json2csv’ offers transforms (for example, flattening) and lets a field’s value be a dot-notation path such as address.city. The example below uses a dot-notation path together with a custom column label:

// Other requires/imports stay the same

const json = [
  { name: "John Doe", age: 30, address: { city: "New York" } },
  { name: "Jane Doe", age: 25, address: { city: "Los Angeles" } }
];

const fields = [
  'name',
  'age',
  {
    label: 'City',
    value: 'address.city'
  }
];

const opts = { fields };

// The rest remains the same as previously shown.
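For intuition about what flattening a nested record means, the following sketch collapses nested objects into a single level with dot-separated keys. It is a plain JavaScript illustration under simple assumptions, not the library’s own code; `flattenObject` is a hypothetical helper, and arrays are kept as values rather than expanded.

```javascript
// Hypothetical helper: collapse nested objects into one level,
// joining key names with dots ({ address: { city } } -> 'address.city').
// Arrays and null are kept as values; Dates and other class instances
// are not handled specially in this sketch.
const flattenObject = (obj, prefix = '') =>
  Object.entries(obj).reduce((flat, [key, value]) => {
    const path = prefix ? `${prefix}.${key}` : key;
    const isNestedObject =
      value !== null && typeof value === 'object' && !Array.isArray(value);
    return isNestedObject
      ? { ...flat, ...flattenObject(value, path) }
      : { ...flat, [path]: value };
  }, {});

const flatRecord = flattenObject({
  name: 'John Doe',
  age: 30,
  address: { city: 'New York' }
});

// flatRecord now has the keys name, age, and 'address.city'
console.log(Object.keys(flatRecord));
```

Once every record is flat, its keys can be used directly as CSV column names.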

Streaming Large JSON Files

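If you are working with large JSON files, it’s best to use streams so that the whole file never has to be held in memory at once. A hand-written stream that calls the parser on each raw file chunk would not work, because a chunk is an arbitrary slice of text rather than a complete JSON value. ‘json2csv’ exports its own Transform stream that parses the incoming JSON incrementally; the example below pipes a file through it:

import { createReadStream, createWriteStream } from 'fs';
import { pipeline } from 'stream/promises';
import { Transform } from 'json2csv';

const opts = { fields: ['name', 'age'] };
const transformOpts = { encoding: 'utf8' };

const input = createReadStream('largeInput.json', { encoding: 'utf8' });
const output = createWriteStream('output.csv', { encoding: 'utf8' });

// json2csv's Transform consumes JSON text and emits CSV text.
const json2csv = new Transform(opts, transformOpts);

try {
  // pipeline() forwards errors from any stage and closes the streams.
  await pipeline(input, json2csv, output);
  console.log('Successfully processed large JSON to CSV.');
} catch (err) {
  console.error('Error streaming JSON to CSV', err);
}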
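The core idea behind streaming conversion, emitting the header once and then one CSV line per record, can be sketched without any library. The generator below is an illustration under the assumption that records arrive one at a time from some iterable source; `quoteField` and `csvLines` are hypothetical names, not part of ‘json2csv’.

```javascript
// Quote fields containing commas, quotes, or line breaks,
// and double any embedded quotes. null/undefined become empty fields.
const quoteField = value => {
  const text = value === null || value === undefined ? '' : String(value);
  return /[",\r\n]/.test(text) ? `"${text.replace(/"/g, '""')}"` : text;
};

// Hypothetical generator: yields the header once, then one CSV line per
// record, so only the current record needs to be held in memory.
function* csvLines(records, fields) {
  yield fields.map(quoteField).join(',') + '\n';
  for (const record of records) {
    yield fields.map(field => quoteField(record[field])).join(',') + '\n';
  }
}

// Usage: write lines as they are produced instead of building one big string.
for (const line of csvLines(
  [{ name: 'John Doe', age: 30 }, { name: 'Jane Doe', age: 25 }],
  ['name', 'age']
)) {
  process.stdout.write(line);
}
```

Because Node.js can build a readable stream from an iterable with `Readable.from`, a generator like this could in principle be piped into a file write stream.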

Conclusion

In this tutorial, we’ve explored different ways to convert JSON to CSV in Node.js, starting with a basic approach that needs no external libraries, then moving to a full-featured third-party library, nested structures, and large datasets processed with streams. You can integrate whichever method fits your use case into your own applications.