This article shows several ways to crawl web pages that render their content with JavaScript frameworks such as React, Vue, and Angular.

Puppeteer-Based Crawling

Puppeteer is a Node.js library that provides a high-level API for controlling Chrome or Chromium over the DevTools Protocol. Because it launches a real browser instance, Puppeteer executes the page's JavaScript and can scrape content that is rendered on the client.

The steps:

- Install Puppeteer via npm.

- Create a script that launches a Puppeteer-controlled browser.

- Navigate to the target URL and wait for the necessary content to load.

- Extract the content using standard DOM queries.

- Close the browser instance.

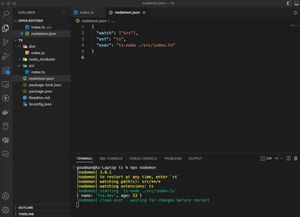

Code example:

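const puppeteer = require('puppeteer');

(async () => {
  // Launch a headless browser instance controlled by Puppeteer
  const browser = await puppeteer.launch();
  try {
    const page = await browser.newPage();
    // Wait until the network is idle so client-side rendering has a chance to finish
    await page.goto('https://example.com', { waitUntil: 'networkidle0' });
    // Full rendered HTML; use page.$ (first match) or page.$$ (all matches) to query for elements
    const content = await page.content();
    console.log(content);
  } finally {
    // Close the browser even if navigation or extraction throws
    await browser.close();
  }
})();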
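The example above dumps the whole page. To wait for specific client-rendered elements and pull out only their text, a minimal sketch might look like the following. The selector you pass (such as the hypothetical `.product-title`) is an assumption you must adapt to the target page, and `extractText` and `crawl` are illustrative names, not part of Puppeteer.

```javascript
// Minimal sketch: wait for client-rendered elements, then extract their text.
// ASSUMPTION: the selector passed in (e.g. the hypothetical '.product-title')
// must be replaced with one that exists on your target page.

// Works with any Puppeteer Page: waits for the selector, then maps matches to trimmed text.
async function extractText(page, selector, timeoutMs = 10000) {
  // Resolves once a matching element is in the DOM; throws if timeoutMs elapses first.
  await page.waitForSelector(selector, { timeout: timeoutMs });
  // The callback runs inside the browser page and receives every matching element.
  return page.$$eval(selector, (elements) =>
    elements.map((el) => el.textContent.trim())
  );
}

async function crawl(url, selector) {
  // Required lazily so extractText can be reused (and tested) without Puppeteer installed.
  const puppeteer = require('puppeteer');
  const browser = await puppeteer.launch();
  try {
    const page = await browser.newPage();
    await page.goto(url, { waitUntil: 'domcontentloaded' });
    return await extractText(page, selector);
  } finally {
    // Always release the browser, even when the selector never appears.
    await browser.close();
  }
}

module.exports = { extractText, crawl };
```

For example, `crawl('https://example.com', '.product-title').then(console.log)` should print an array of trimmed strings if the selector matches. Keeping `extractText` separate from the browser setup means it can be reused with a page you already have open.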

Pros: Simulates a real user environment and handles JavaScript rendering. Cons: Resource-intensive, since every page is loaded in a full browser, which consumes significant CPU and memory.
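One common way to reduce that cost, sketched below under the assumption that your crawler only needs the page text, is to abort requests for resources that do not affect the content. `blockHeavyResources` and the list of blocked types are illustrative choices, not a Puppeteer default.

```javascript
// Minimal sketch: reduce Puppeteer's resource usage by aborting requests the crawler does not need.
// ASSUMPTION: these types are a starting point; blocking stylesheets can affect layout-dependent pages.
const BLOCKED_TYPES = new Set(['image', 'stylesheet', 'font', 'media']);

async function blockHeavyResources(page) {
  // Once interception is on, every request must be continued or aborted, otherwise it stalls.
  await page.setRequestInterception(true);
  page.on('request', (request) => {
    if (BLOCKED_TYPES.has(request.resourceType())) {
      request.abort();
    } else {
      request.continue();
    }
  });
}

module.exports = { blockHeavyResources, BLOCKED_TYPES };
```

Call `await blockHeavyResources(page)` after `browser.newPage()` and before `page.goto(...)`. If content goes missing, try removing `'stylesheet'` from the set.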

Headless Chrome with Rendertron

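Rendertron is a dockerized, headless Chrome rendering solution that uses Puppeteer to render pages and serve the resulting static HTML to crawlers that do not handle JavaScript well. Note that its maintainers have since deprecated the project, so check its status before depending on it.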

The steps:

- Set up Rendertron middleware on your server or use a Rendertron service.

- Create a script using axios or another HTTP client to make requests to the Rendertron service.

- Parse the static HTML returned by Rendertron.

- Extract data using a library like cheerio that can interpret the static HTML content.

Code example:

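const axios = require('axios');
const cheerio = require('cheerio');

(async () => {
  // Rendertron's /render/ endpoint followed by the page to render.
  // For real crawling, point this at your own Rendertron deployment.
  const url = 'https://render-tron.appspot.com/render/https://example.com';
  // Rendertron responds with the page's static, already-rendered HTML
  const response = await axios.get(url);
  // Load the HTML into cheerio for jQuery-style querying
  const $ = cheerio.load(response.data);
  const content = $('selector').text(); // Replace 'selector' with the correct selector
  console.log(content);
})();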
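The example above covers only the client side. The first step, routing crawler traffic on your own server through Rendertron, is sketched below as a hand-rolled illustration of the idea (often called dynamic rendering), not Rendertron's own middleware. It assumes Node 18+ for the built-in `fetch`; the bot pattern, `rendertronBase`, `publicOrigin`, and `serveApp` are hypothetical placeholders.

```javascript
// Minimal sketch of dynamic rendering with Rendertron, using only Node built-ins (Node 18+ for fetch).
// ASSUMPTIONS (hypothetical): BOT_PATTERN is an incomplete example list, and rendertronBase,
// publicOrigin and serveApp are placeholders for your own deployment.
const BOT_PATTERN = /googlebot|bingbot|yandex|baiduspider|twitterbot|facebookexternalhit|linkedinbot|slackbot/i;

// True when the User-Agent header looks like a crawler that should receive prerendered HTML.
function isBot(userAgent) {
  return BOT_PATTERN.test(userAgent || '');
}

// Builds a Rendertron /render/ URL; the target is percent-encoded so its own
// query string is not mistaken for part of the Rendertron request.
function renderUrlFor(rendertronBase, pageUrl) {
  return `${rendertronBase.replace(/\/+$/, '')}/render/${encodeURIComponent(pageUrl)}`;
}

// Returns a handler for http.createServer: bots are proxied to Rendertron,
// all other visitors go to serveApp (your normal JavaScript app).
function makeHandler({ rendertronBase, publicOrigin, serveApp }) {
  return async (req, res) => {
    if (!isBot(req.headers['user-agent'])) {
      return serveApp(req, res);
    }
    const pageUrl = new URL(req.url, publicOrigin).href;
    try {
      const rendered = await fetch(renderUrlFor(rendertronBase, pageUrl));
      res.writeHead(rendered.status, { 'Content-Type': 'text/html; charset=utf-8' });
      res.end(await rendered.text());
    } catch {
      // If Rendertron is unreachable, fall back to the unrendered app instead of failing.
      return serveApp(req, res);
    }
  };
}

module.exports = { isBot, renderUrlFor, makeHandler };
```

You could wire it up with `require('node:http').createServer(makeHandler({ rendertronBase: 'https://your-rendertron-host', publicOrigin: 'https://www.example.com', serveApp })).listen(8080)`, where `serveApp` is whatever handler already serves your application.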

Pros: Offloads rendering to a separate service and returns static HTML snapshots. Cons: Introduces a dependency on an external service and adds latency from the extra network hop.

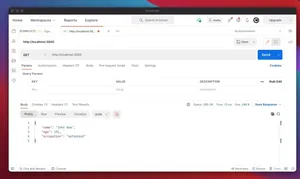

API Interception with axios and cheerio

In some cases, the content a website loads with JavaScript comes from a separate API. By inspecting the page's network requests, you can call that API directly, retrieve the content, and parse it without a headless browser.

The process includes 4 steps:

- Inspect the network requests the site makes to the API that provides the dynamic content (for example, in the browser DevTools Network tab).

- Replicate these requests using an HTTP client like axios.

- Pass the received JSON response to a script for processing.

- Parse required content from the response.
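The first step is usually done by hand in the browser DevTools Network tab, filtered to Fetch/XHR. If you prefer to script it, the sketch below uses Puppeteer once, only for discovery, to record the JSON responses a page receives while it renders. The filter (XHR or fetch requests with a JSON content type) is an assumption that may miss APIs returning other formats, and `recordJsonResponses` and `discoverApiCalls` are hypothetical names.

```javascript
// Minimal sketch for step 1: record the JSON API calls a page makes while it renders.
// ASSUMPTION: only XHR/fetch responses with a JSON content type are treated as API calls.

// Registers a listener and returns an array that fills up as matching responses arrive.
function recordJsonResponses(page) {
  const seen = [];
  page.on('response', (response) => {
    const request = response.request();
    const type = request.resourceType(); // e.g. 'document', 'script', 'xhr', 'fetch'
    const contentType = response.headers()['content-type'] || ''; // header names are lower-cased
    if ((type === 'xhr' || type === 'fetch') && contentType.includes('application/json')) {
      seen.push({ method: request.method(), url: response.url(), status: response.status() });
    }
  });
  return seen;
}

async function discoverApiCalls(targetUrl) {
  // Required lazily so recordJsonResponses can be reused without launching a browser.
  const puppeteer = require('puppeteer');
  const browser = await puppeteer.launch();
  try {
    const page = await browser.newPage();
    const seen = recordJsonResponses(page);
    // Let the page finish its background requests before returning what was recorded.
    await page.goto(targetUrl, { waitUntil: 'networkidle0' });
    return seen;
  } finally {
    await browser.close();
  }
}

module.exports = { recordJsonResponses, discoverApiCalls };
```

Running `discoverApiCalls('https://example.com').then(console.table)` lists candidate endpoints, which you can then replicate with an HTTP client such as axios.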

Example:

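const axios = require('axios');

(async () => {
  // Call the API endpoint the page itself uses (found in the browser's Network tab)
  const response = await axios.get('https://example-api.com/data');
  // axios parses JSON responses automatically, so response.data is already an object
  const jsonData = response.data;
  // Extract the data you need from jsonData
  console.log(jsonData);
})();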
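Real APIs often split results across pages. The sketch below walks such an API and keeps only the fields you need. The `?page=N` parameter and the `{ items, nextPage }` response shape are hypothetical; substitute whatever the site's actual endpoint uses. It relies on Node 18+'s built-in `fetch` instead of axios so that it has no dependencies, and the page fetcher is passed in as a function so the paging logic can be tested without network access.

```javascript
// Minimal sketch: page through a JSON API discovered in the browser's Network tab.
// ASSUMPTIONS (hypothetical): the endpoint accepts ?page=N and responds with
// { items: [{ id, title, ... }], nextPage: number | null }.

// Requests pages until nextPage is null or maxPages is reached, keeping only id and title.
async function collectItems(fetchPage, maxPages = 20) {
  const results = [];
  let page = 1;
  for (let fetched = 0; page != null && fetched < maxPages; fetched++) {
    const data = await fetchPage(page);
    for (const item of data.items ?? []) {
      results.push({ id: item.id, title: item.title });
    }
    page = data.nextPage ?? null;
  }
  return results;
}

// Fetches one page with Node 18+'s built-in fetch.
async function fetchPageFromApi(page) {
  const url = new URL('https://example-api.com/data');
  url.searchParams.set('page', String(page));
  const response = await fetch(url, {
    // Some APIs check request headers; copy whatever the browser sent if needed.
    headers: { Accept: 'application/json' },
  });
  if (!response.ok) {
    throw new Error(`Request to ${url} failed with HTTP ${response.status}`);
  }
  return response.json();
}

module.exports = { collectItems, fetchPageFromApi };
```

`collectItems(fetchPageFromApi).then(console.log)` would keep requesting pages until `nextPage` is `null` or `maxPages` is reached. The `maxPages` cap guards against an API that never reports a last page.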

Pros: Fast and lightweight, since no browser is involved. Cons: Does not work on every site; you must first discover the API endpoints, which can change without notice.

Conclusion

Crawling dynamically generated content with Node.js can be done in several ways. Headless browsers such as Puppeteer simulate a real browsing session and accurately capture JavaScript-generated content. Rendering services such as Rendertron centralize that work and offload the heavy lifting from your own system. Where the underlying API can be called directly, intercepting it is the fastest option, at the cost of adaptability and robustness: it only works when such an endpoint exists, and it can break when the site changes its API. The right tool depends on the specific needs and constraints of your project.