Streams are a core feature of Node.js that let you read or write data as a continuous flow of chunks. All streams are instances of the EventEmitter class, so I/O is handled through events. They are particularly useful when dealing with large volumes of data, or data that arrives piece by piece, such as a huge file or network traffic. Because only the current chunk needs to be held in memory, streams can keep memory usage low and let processing start before all the data has arrived. In this tutorial, we will explore the basics of Node.js streams through several examples.

The Fundamentals

Four Types of Streams

- Readable Streams: For reading data sequentially.

- Writable Streams: For writing data sequentially.

- Duplex Streams: For both reading and writing data.

- Transform Streams: A type of duplex stream whose output is computed from its input.
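
To make the four categories concrete, here is a minimal sketch that builds one stream of each type from the core `stream` module, entirely in memory. The `kinds` object and the do-nothing `read`/`write` bodies are illustrative assumptions; `PassThrough` is a built-in transform that forwards its input unchanged.

```javascript
const { EventEmitter } = require('events');
const { Readable, Writable, Duplex, Transform, PassThrough } = require('stream');

// One minimal instance of each stream type, all in memory.
const kinds = {
  // Readable: produces data.
  readable: Readable.from(['a', 'b']),
  // Writable: consumes data (here it simply discards each chunk).
  writable: new Writable({
    write(chunk, encoding, callback) { callback(); }
  }),
  // Duplex: a readable side and an independent writable side.
  duplex: new Duplex({
    read() {},
    write(chunk, encoding, callback) { callback(); }
  }),
  // Transform: a duplex whose output is derived from its input.
  transform: new PassThrough()
};

// Every stream is an EventEmitter, and every transform is also a duplex.
const allAreEmitters = Object.values(kinds).every((s) => s instanceof EventEmitter);
const transformIsDuplex = kinds.transform instanceof Duplex;

console.log({ allAreEmitters, transformIsDuplex });
```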

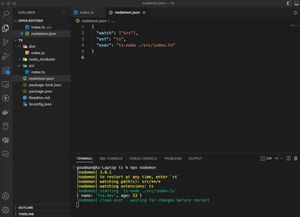

Creating a Readable Stream

Let’s begin by creating a simple readable stream. This is a basic operation where we read data from a stream source, such as a file.

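    const fs = require('fs');

    // Open input.txt and decode its bytes as UTF-8 strings.
    const readableStream = fs.createReadStream('input.txt', 'utf8');

    // Each 'data' event delivers the next chunk of the file.
    readableStream.on('data', function(chunk) {
      console.log(chunk);
    });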
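
Readable streams are also async iterables, so `for await...of` is an alternative to listening for `'data'`. The sketch below assumes a hypothetical helper named `readAll` and uses `Readable.from()` as an in-memory stand-in for a file stream, so it runs without `input.txt`.

```javascript
const { Readable } = require('stream');

// Hypothetical helper: collect every chunk of a readable stream into one string.
async function readAll(readable) {
  let text = '';
  // Each iteration yields the next chunk. The loop ends when the stream ends
  // and throws if the stream emits 'error'.
  for await (const chunk of readable) {
    text += chunk.toString();
  }
  return text;
}

// In-memory stand-in for fs.createReadStream('input.txt', 'utf8').
const demoText = readAll(Readable.from(['Hello ', 'Streams!']));
demoText.then((text) => console.log(text));
```

When using this pattern with a file, creating the stream with the `'utf8'` encoding (as above) means chunks arrive as strings, so multi-byte characters are not split across chunks.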

Creating a Writable Stream

Next, we create a writable stream, which allows us to write data to a destination, like a file:

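    const fs = require('fs');

    // Open (or create) output.txt for writing, replacing any existing content.
    const writableStream = fs.createWriteStream('output.txt');

    writableStream.write('Hello Streams!', 'utf8');

    // Close the stream once everything has been written.
    writableStream.end();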

When you call writableStream.end(), you signal that no more data will be written to the stream. Once the remaining data has been flushed, the stream emits a 'finish' event.
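
The same write-then-end sequence can be tried without touching the file system. This is a minimal sketch, assuming an illustrative in-memory destination (`memoryWritable`) that records what it receives; the names `received` and `whenFinished` are not part of any Node.js API.

```javascript
const { Writable } = require('stream');

// Illustrative in-memory destination standing in for a file.
const received = [];
const memoryWritable = new Writable({
  write(chunk, encoding, callback) {
    received.push(chunk.toString());
    callback(); // signal that this chunk has been handled
  }
});

// Resolves once end() has been called and every chunk has been processed.
const whenFinished = new Promise((resolve, reject) => {
  memoryWritable.on('finish', () => resolve(received.join('')));
  memoryWritable.on('error', reject);
});

memoryWritable.write('Hello ');
memoryWritable.end('Streams!'); // end() can also take a final chunk

whenFinished.then((text) => console.log('Finished with:', text));
```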

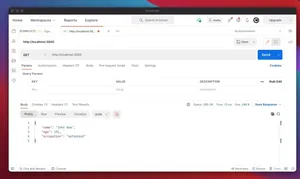

Piping Between Streams

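Piping connects the output of one stream to the input of another. The most common case is piping a readable stream into a writable one, and pipe() manages the flow so the source does not overwhelm the destination:

    readableStream.pipe(writableStream);

Assuming readableStream and writableStream are freshly created as in the previous two sections (and writableStream has not already been ended), this reads data from input.txt and writes it to output.txt. By default, pipe() also ends the writable stream when the readable stream ends.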
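
One caveat is that `pipe()` does not forward errors from one stream to the next. The core `pipeline()` function addresses this: it connects the streams, reports the first error, and destroys the streams on failure. Below is a sketch using the promise-based version from `stream/promises`; the helper name `copyWithStreams` and the temporary file paths are assumptions made for the demo.

```javascript
const fs = require('fs');
const os = require('os');
const path = require('path');
const { pipeline } = require('stream/promises');

// Copy one file to another; the returned promise rejects if either stream fails.
async function copyWithStreams(sourcePath, destinationPath) {
  await pipeline(
    fs.createReadStream(sourcePath),
    fs.createWriteStream(destinationPath)
  );
}

// Demo with throwaway files in a temporary directory (paths are illustrative).
const demoDir = fs.mkdtempSync(path.join(os.tmpdir(), 'streams-demo-'));
const inputPath = path.join(demoDir, 'input.txt');
const outputPath = path.join(demoDir, 'output.txt');
fs.writeFileSync(inputPath, 'Hello Streams!');

const copyDone = copyWithStreams(inputPath, outputPath);
copyDone.then(() => console.log(fs.readFileSync(outputPath, 'utf8')));
```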

Advanced Examples

Transforming Data with Streams

Transform streams let you modify data as it is written or read. Let’s create a transform stream that converts input to uppercase:

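    const { Transform } = require('stream');

    const upperCaseTr = new Transform({
      // Called once per chunk; push() emits the transformed output.
      transform(chunk, encoding, callback) {
        this.push(chunk.toString().toUpperCase());
        callback(); // signal that this chunk has been processed
      }
    });

    // readableStream and writableStream are fresh streams created as in the earlier sections.
    readableStream.pipe(upperCaseTr).pipe(writableStream);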
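
For a version that runs on its own, the sketch below wires the same uppercase transform between an in-memory source and an in-memory sink. The helper names `createUpperCaseTransform` and `upperCaseAll` are hypothetical, and `pipeline()` from `stream/promises` is used in place of chained `pipe()` calls so that errors reject the returned promise.

```javascript
const { Readable, Transform, Writable } = require('stream');
const { pipeline } = require('stream/promises');

// Factory so each pipeline gets a fresh transform (a stream cannot be reused once ended).
function createUpperCaseTransform() {
  return new Transform({
    transform(chunk, encoding, callback) {
      // Passing the result to callback is equivalent to push() followed by callback().
      callback(null, chunk.toString().toUpperCase());
    }
  });
}

// Hypothetical helper: run the given string parts through the transform.
async function upperCaseAll(parts) {
  const output = [];
  const sink = new Writable({
    write(chunk, encoding, callback) {
      output.push(chunk.toString());
      callback();
    }
  });
  await pipeline(Readable.from(parts), createUpperCaseTransform(), sink);
  return output.join('');
}

const upperCased = upperCaseAll(['hello ', 'streams!']);
upperCased.then((text) => console.log(text));
```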

Handling Stream Events

Streams emit events that can be used to handle various aspects of the data flow, like the following:

- 'data': when data is available to read

- 'end': when there is no more data to read

- 'error': when there is an error

- 'finish': when all data has been flushed to the underlying system

Here’s an example of handling stream events:

    const fs = require('fs');

    const readableStream = fs.createReadStream('input.txt');
    const writableStream = fs.createWriteStream('output.txt');

    readableStream.on('data', (chunk) => {
      console.log('New chunk received.');
      writableStream.write(chunk);
    });

    readableStream.on('end', () => {
      writableStream.end();
      console.log('No more data to read.');
    });

    writableStream.on('finish', () => {
      console.log('All data written.');
    });

    readableStream.on('error', (err) => {
      console.error('An error occurred:', err);
    });

    writableStream.on('error', (err) => {
      console.error('Error writing file:', err);
    });
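
When chunks are forwarded by hand like this, the return value of `write()` matters: it returns `false` once the destination's internal buffer is full, and the stream emits `'drain'` when it is ready for more. The following sketch shows one way to respect that signal by pausing the source. The helper name `forwardWithBackpressure`, the deliberately slow in-memory destination, and the tiny `highWaterMark` are assumptions chosen to make the effect visible.

```javascript
const { Readable, Writable } = require('stream');

// Hypothetical helper: forward chunks manually while respecting backpressure.
function forwardWithBackpressure(source, destination) {
  return new Promise((resolve, reject) => {
    source.on('data', (chunk) => {
      // write() returns false once the destination's internal buffer is full.
      if (!destination.write(chunk)) {
        source.pause();
        destination.once('drain', () => source.resume());
      }
    });
    source.on('end', () => destination.end());
    destination.on('finish', resolve);
    source.on('error', reject);
    destination.on('error', reject);
  });
}

// Demo: a slow destination with a tiny buffer (highWaterMark is in bytes here).
const written = [];
const slowDestination = new Writable({
  highWaterMark: 4,
  write(chunk, encoding, callback) {
    written.push(chunk.toString());
    setImmediate(callback); // simulate asynchronous I/O
  }
});

const forwarded = forwardWithBackpressure(
  Readable.from(['alpha', 'beta', 'gamma']),
  slowDestination
).then(() => written.join(','));

forwarded.then((result) => console.log(result));
```

In most applications `pipe()` or `pipeline()` does this bookkeeping for you; manual handling is mainly useful when you need custom logic between reading and writing.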

Conclusion

To sum up, Node.js streams are powerful tools for processing data in an efficient and scalable manner. By understanding the four types of streams (readable, writable, duplex, and transform), you can handle large and complex data flows within your Node.js applications. Streams let you process data piece by piece without keeping it all in memory, which keeps memory usage low and your applications responsive.

We covered creating readable and writable streams, piping between streams, transforming streams, and handling stream events. With these concepts and examples, you should be well equipped to start working with Node.js streams in your own projects.

With practice and exploration, streams can be a powerful addition to your Node.js toolkit. Happy coding!