Caching can greatly improve the performance of a web application by reducing response times and cutting round trips between the server and the database. A Node.js and Express.js application can use several caching strategies, each suited to a different use case. In this article, we will explore three popular ones (in-memory, Redis, and file-based caching) and walk through how to implement each.

In-Memory Caching

In-memory caching stores data directly in the memory of the Node.js process. It is very fast because fetching cached data involves no network or disk I/O.

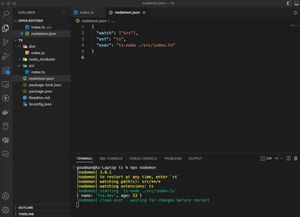

- Step 1: Install a caching package such as ‘node-cache’ via npm.

- Step 2: Initialize the cache object in your application.

- Step 3: Create middleware to check the cache before processing a standard request.

- Step 4: Set cache entries when the data is first fetched, and retrieve them from the cache on subsequent requests.

- Step 5: Set an appropriate expiration time for cache entries.

```javascript
const express = require('express');
const NodeCache = require('node-cache');

const app = express();
const myCache = new NodeCache();

// Serve GET responses from the cache when an entry exists
function cacheMiddleware(req, res, next) {
  // Only GET requests are safe to answer from the cache
  if (req.method !== 'GET') {
    return next();
  }

  const cachedResponse = myCache.get(req.originalUrl);

  // node-cache returns undefined on a miss
  if (cachedResponse !== undefined) {
    return res.send(cachedResponse);
  }

  next();
}

// Runs only on a cache miss, because cacheMiddleware answers hits first
function someResourceHandler(req, res) {
  // Fetch data from the database or another source
  const data = fetchData();

  // Cache it with a time-to-live (TTL) in seconds
  myCache.set(req.originalUrl, data, 3600);

  res.send(data);
}

// Simulates data fetching; replace with actual data fetching logic
function fetchData() {
  return { data: 'Sample data' };
}

// Check the cache before any route handler runs
app.use(cacheMiddleware);

// Handle the resource fetch
app.get('/resource', someResourceHandler);

// Start the server
const PORT = 3000;

app.listen(PORT, () => {
  console.log(`Server running on port ${PORT}`);
});
```

Pros: Rapid data access and easy implementation. Ideal for small to medium-sized datasets that do not require persistence after a server reboot.

Cons: Limited by the available system memory. Data is lost when the server restarts, and each process keeps its own cache, so horizontal scaling requires an additional strategy such as a distributed cache.
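One way to keep an in-process cache from growing without bound is to cap the number of entries and evict the least recently used one when the cap is reached. The sketch below is a hypothetical `BoundedCache` class built on a plain `Map`. It is not part of node-cache, and the class name, option names, and defaults are assumptions chosen for illustration.

```javascript
// Hypothetical helper (not part of node-cache): a Map-backed cache with a
// per-entry TTL and a cap on the number of entries.
class BoundedCache {
  constructor({ maxEntries = 1000, ttlMs = 60 * 1000, now = Date.now } = {}) {
    this.maxEntries = maxEntries;
    this.ttlMs = ttlMs;
    this.now = now; // injectable clock, which keeps the class easy to test
    this.entries = new Map(); // a Map iterates in insertion order
  }

  get(key) {
    const entry = this.entries.get(key);
    if (entry === undefined) {
      return undefined;
    }
    if (entry.expiresAt <= this.now()) {
      this.entries.delete(key); // expired: drop it and report a miss
      return undefined;
    }
    // Re-insert so this key becomes the most recently used
    this.entries.delete(key);
    this.entries.set(key, entry);
    return entry.value;
  }

  set(key, value) {
    this.entries.delete(key); // refresh the position of an existing key
    if (this.entries.size >= this.maxEntries) {
      // The first key in iteration order is the least recently used
      const oldestKey = this.entries.keys().next().value;
      this.entries.delete(oldestKey);
    }
    this.entries.set(key, { value, expiresAt: this.now() + this.ttlMs });
  }

  get size() {
    return this.entries.size;
  }
}

// Usage: at most two entries, each valid for one hour
const pageCache = new BoundedCache({ maxEntries: 2, ttlMs: 60 * 60 * 1000 });
pageCache.set('/resource', { data: 'Sample data' });
```

Capping the entry count only approximates a memory limit, since entries can differ greatly in size. If responses vary widely, a limit based on serialized size may be a better fit.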

Redis Caching

Redis is an open-source, in-memory data structure store that can be used as a cache, database, or message broker. It’s popular due to its speed and versatility. Setting up Redis caching requires a running Redis server and integrating it with the Node.js/Express application.

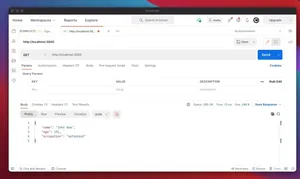

- Step 1: Install the ‘redis’ npm package and set up a Redis server.

- Step 2: Establish a connection to the Redis server in your application.

- Step 3: Create middleware, similar to the in-memory example, that intercepts requests and returns cached responses.

- Step 4: If data is not in the cache, fetch it, cache it, then return the response.

- Step 5: Configure a suitable expiration policy for cached items.

```javascript
const express = require('express');
const redis = require('redis');

const app = express();

// Create a Redis client (connects to localhost:6379 by default)
const client = redis.createClient();

client.on('error', (err) => console.error('Redis Client Error', err));

// Connect to Redis; connect() returns a promise
client.connect().catch((err) => console.error('Redis connection failed', err));

// Cache middleware: answer GET requests from Redis when possible
async function cacheMiddleware(req, res, next) {
  if (req.method !== 'GET') {
    return next();
  }

  try {
    const cachedResponse = await client.get(req.originalUrl);

    // client.get() resolves to null on a miss
    if (cachedResponse !== null) {
      return res.send(JSON.parse(cachedResponse));
    }

    next();
  } catch (err) {
    console.error(err);
    res.status(500).send('Server Error');
  }
}

// Handler for a specific resource; runs only on a cache miss,
// because cacheMiddleware answers hits first
async function someResourceHandler(req, res) {
  try {
    // Fetch data from a hypothetical function
    const data = await fetchData();

    // Cache the serialized data; EX sets the expiry in seconds (1 hour)
    await client.set(req.originalUrl, JSON.stringify(data), { EX: 3600 });

    res.send(data);
  } catch (err) {
    console.error(err);
    res.status(500).send('Server Error');
  }
}

// A mock function to simulate data fetching;
// replace this with actual data fetching logic
async function fetchData() {
  return { data: 'Sample data' };
}

// Check the cache before any route handler runs
app.use(cacheMiddleware);

// Handle the resource fetch
app.get('/resource', someResourceHandler);

// Start the server
const PORT = 3000;

app.listen(PORT, () => {
  console.log(`Server running on port ${PORT}`);
});
```

Pros: Cached data lives outside the Node.js process, so it survives application restarts and can be shared by every instance of the application. Redis can also persist data to disk when configured to do so, and it supports replication and clustering, which makes it suitable for larger datasets and distributed systems.

Cons: Requires a separate service to run and manage. Adds network overhead compared with in-memory caching.
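Because Redis runs as a separate service, it can be unreachable while the application itself is healthy. The following sketch assumes that a cache failure should degrade to a slower response rather than an error. It wraps the fetch-and-cache pattern in a hypothetical `getOrLoad` helper. The `store` interface and the `redisStore` adapter are illustrative names and are not part of the redis package.

```javascript
// Hypothetical helper: a cache-aside lookup that treats the cache as optional.
// `store` is any object with async get(key) and set(key, value, ttlSeconds).
async function getOrLoad(store, key, ttlSeconds, loader) {
  try {
    const cached = await store.get(key);
    if (cached !== null && cached !== undefined) {
      return JSON.parse(cached);
    }
  } catch (err) {
    // Cache unavailable or entry unreadable: fall through to the source
    console.warn('Cache read failed, falling back to source:', err.message);
  }

  const fresh = await loader();

  try {
    await store.set(key, JSON.stringify(fresh), ttlSeconds);
  } catch (err) {
    // A failed write only means the next request will miss again
    console.warn('Cache write failed:', err.message);
  }

  return fresh;
}

// Adapter (an assumption for illustration) that maps the interface above
// onto a connected node-redis v4 client.
function redisStore(client) {
  return {
    get: (key) => client.get(key),
    set: (key, value, ttlSeconds) => client.set(key, value, { EX: ttlSeconds }),
  };
}
```

Inside a route handler this could be called as `const data = await getOrLoad(redisStore(client), req.originalUrl, 3600, fetchData);`. Depending on how the client is configured, commands issued while disconnected may be queued rather than rejected, so a timeout around the cache calls may also be needed.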

File-Based Caching

File-based caching involves writing the cached data to the filesystem. It provides persistence over server restarts and can be shared across multiple server instances if stored in a shared location.

- Step 1: Choose a directory to serve as your cache storage location.

- Step 2: Write middleware to read the cache file before continuing with the request.

- Step 3: Write to the cache file after fetching data for the first time.

- Step 4: Implement a strategy for cache expiration and invalidation.

```javascript
const crypto = require('crypto');
const fs = require('fs');
const path = require('path');
const express = require('express');

const app = express();
const cacheDirectory = './cache';

// Ensure the cache directory exists (a no-op if it is already there)
fs.mkdirSync(cacheDirectory, { recursive: true });

// Map a cache key to a file path. Hashing the key keeps query strings and
// other special characters out of the file name.
function getCacheFilePath(key) {
  const fileName = crypto.createHash('sha256').update(key).digest('hex');
  return path.join(cacheDirectory, `${fileName}.json`);
}

// Cache middleware: answer GET requests from the cache file when it exists.
// The synchronous fs calls keep the example short but block the event loop.
function cacheMiddleware(req, res, next) {
  if (req.method !== 'GET') {
    return next();
  }

  const cacheFile = getCacheFilePath(req.originalUrl);

  if (!fs.existsSync(cacheFile)) {
    return next();
  }

  try {
    const cachedResponse = fs.readFileSync(cacheFile, 'utf-8');
    res.send(JSON.parse(cachedResponse));
  } catch (err) {
    console.error(err);
    res.status(500).send('Server Error');
  }
}

// Handler for a specific resource; runs only on a cache miss,
// because cacheMiddleware answers hits first
function someResourceHandler(req, res) {
  const cacheFile = getCacheFilePath(req.originalUrl);

  try {
    // Fetch data from a hypothetical function
    const data = fetchData();

    // Cache the data
    fs.writeFileSync(cacheFile, JSON.stringify(data), 'utf-8');

    res.send(data);
  } catch (err) {
    console.error(err);
    res.status(500).send('Server Error');
  }
}

// A mock function to simulate data fetching;
// replace this with actual data fetching logic
function fetchData() {
  return { data: 'Sample data' };
}

// Check the cache before any route handler runs
app.use(cacheMiddleware);

// Handle the resource fetch
app.get('/resource', someResourceHandler);

// Start the server
const PORT = 3000;

app.listen(PORT, () => {
  console.log(`Server running on port ${PORT}`);
});
```

Pros: Simple implementation and persistence across server reboots. Can be used with a shared filesystem in distributed environments.

Cons: Slower than in-memory solutions due to file I/O. Cache management and invalidation can be more complex.
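Expiration for a file cache has to be implemented by hand. Here is a minimal sketch, assuming the file's modification time is an acceptable measure of an entry's age. `readCacheFile` treats entries older than a TTL as misses, and `writeCacheFile` writes to a temporary file and then renames it, so that readers are less likely to see a half-written entry. Both function names are hypothetical.

```javascript
const fs = require('fs');

// Hypothetical helper: returns the parsed cache entry, or undefined when the
// file is missing, older than ttlMs, or not valid JSON.
function readCacheFile(cacheFile, ttlMs, now = Date.now()) {
  try {
    const { mtimeMs } = fs.statSync(cacheFile);

    if (now - mtimeMs > ttlMs) {
      fs.unlinkSync(cacheFile); // stale: remove it and report a miss
      return undefined;
    }

    return JSON.parse(fs.readFileSync(cacheFile, 'utf-8'));
  } catch (err) {
    // A missing file or corrupt JSON is treated as a cache miss
    return undefined;
  }
}

// Hypothetical helper: write to a temporary file in the same directory, then
// rename it over the target. The process ID keeps temp names from colliding
// when several processes share the cache directory.
function writeCacheFile(cacheFile, data) {
  const tempFile = `${cacheFile}.${process.pid}.tmp`;
  fs.writeFileSync(tempFile, JSON.stringify(data), 'utf-8');
  fs.renameSync(tempFile, cacheFile);
}
```

A rename within one directory is atomic on most local filesystems, but the guarantees on network or shared filesystems vary, so this is worth verifying before relying on it across multiple servers.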

Conclusion

Caching is a powerful way to speed up data retrieval in a Node.js and Express.js application, improving the overall user experience. The caching strategy chosen should be in line with the application’s requirements, scalability needs, and available resources. While in-memory caching provides the fastest access times, solutions like Redis offer greater scalability and persistence. File-based caching can be useful when persistence is a priority and resources are limited. By using one or a combination of these methods, developers can significantly reduce latency and server load, leading to a more responsive and efficient application.