Overview

In modern development, populating databases, creating mock data, and running stress tests often call for large sets of fake data. Faker.js is a popular library for generating such data, and pairing it with TypeScript lets you check the shape of that data against your types at compile time. This guide walks through using Faker.js in a TypeScript project, covering setup, typed generators, and practical examples.

Introducing Faker.js

Faker.js generates large amounts of realistic fake data for purposes such as testing and bootstrapping databases. Used with TypeScript, it adds compile-time type-checking, so the objects you build from Faker.js values must match the shape of your data models.

Setting Up Your TypeScript Project

To start, ensure you have Node.js and npm (Node Package Manager) installed. Then, initialize a new TypeScript project:

    $ mkdir my-faker-project
    $ cd my-faker-project
    $ npm init -y
    $ npm install typescript --save-dev
    $ npx tsc --init

Install Faker.js:

    $ npm install @faker-js/faker

Once installed, you can use Faker.js by importing it into your TypeScript files.

Basic Usage of Faker.js with TypeScript

Create a file named userGenerator.ts and add the following:

    import { faker } from '@faker-js/faker';

    interface User {
      id: number;
      firstName: string;
      lastName: string;
      email: string;
    }

    // Uses the v8+ API: faker.number.int() and faker.person.* replace
    // faker.datatype.number() and faker.name.*, which were deprecated in v8
    // and removed in v9.
    export const generateUser = (): User => ({
      id: faker.number.int(),
      firstName: faker.person.firstName(),
      lastName: faker.person.lastName(),
      email: faker.internet.email(),
    });

    console.log(generateUser());

This simple example demonstrates how to generate a mocked user object with a structure defined by the User interface, using Faker.js.

Advanced Faker.js Techniques in TypeScript

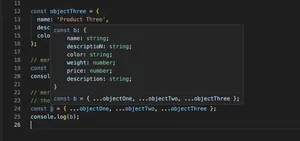

For more advanced scenarios, such as generating arrays of data, customizing data generation based on specific rules, or integrating Faker.js data into automated tests, typed generator functions can be combined with the rest of Faker.js’s feature set.

For example, to create an array of 100 users:

    const users: User[] = Array.from({ length: 100 }, () => generateUser());
    console.log(users);

To tailor the generation of fake data, you might want to customize your generateUser function, perhaps adding conditionals or loops that reflect more complex logic.
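One way such customization might look is sketched below, assuming @faker-js/faker v8 or later. The function name generateCustomUser, the overrides parameter, and the "every tenth user is an admin" rule are all hypothetical and only illustrate the idea of mixing fixed and random fields.

```typescript
import { faker } from '@faker-js/faker';

interface User {
  id: number;
  firstName: string;
  lastName: string;
  email: string;
}

// Hypothetical variant of generateUser: callers may pin any field via `overrides`.
const generateCustomUser = (overrides: Partial<User> = {}): User => {
  const firstName = overrides.firstName ?? faker.person.firstName();
  const lastName = overrides.lastName ?? faker.person.lastName();
  return {
    id: faker.number.int({ min: 1, max: 1_000_000 }),
    firstName,
    lastName,
    // Build the email from the chosen name so the record is internally consistent.
    email: faker.internet.email({ firstName, lastName }),
    // Explicit overrides win over generated values.
    ...overrides,
  };
};

// Illustrative rule (an assumption): every tenth user gets a fixed admin address.
const customUsers: User[] = Array.from({ length: 20 }, (_, i) =>
  i % 10 === 0
    ? generateCustomUser({ email: `admin${i}@example.com` })
    : generateCustomUser(),
);

console.log(customUsers.slice(0, 3));
```

Accepting a Partial<User> keeps the generator typed: a misspelled or wrongly typed override is rejected by the compiler instead of silently producing malformed data.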

Integrating Faker.js Data into Automated Tests

Using Faker.js data in automated tests can drastically reduce the effort in test preparation. Consider a scenario where you need to test a user registration system. With Faker.js, you can generate a new user for each test run:

    // Assumes generateUser is exported from userGenerator.ts
    import { generateUser } from './userGenerator';
    import { registerUser } from './userRegistration';

    describe('User Registration', () => {
      it('should register a new user', async () => {
        const fakeUser = generateUser();
        const result = await registerUser(fakeUser);
        expect(result).toBeTruthy(); // Or any other assertion
      });
    });

This approach ensures that your tests don’t depend on a static set of data and can handle a variety of data inputs.
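A trade-off of random inputs is that a failing run can be hard to reproduce. Faker.js lets you seed its generator so the same sequence of values comes out on every run. The following is a minimal sketch, assuming @faker-js/faker v8 or later; the seed value 42 is arbitrary.

```typescript
import { faker } from '@faker-js/faker';

// Seed the generator so the following calls produce a repeatable sequence.
faker.seed(42);
const first = [faker.person.firstName(), faker.internet.email()];

// Re-seeding with the same value restarts the sequence from the beginning.
faker.seed(42);
const second = [faker.person.firstName(), faker.internet.email()];

// Both arrays hold the same values because the call order is identical.
console.log(first, second);
```

In a test suite, the seed call would typically go in a setup hook that runs before each test. The concrete values produced for a given seed may change between Faker.js versions, so tests should not hard-code them.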

Customizing Faker.js

Faker.js is also customizable: you can extend it or change its locale to match your application’s needs. In v8 and later, the faker.locale setter is no longer available; each locale is instead exposed as its own prebuilt instance, such as fakerDE for German:

    import { fakerDE } from '@faker-js/faker'; // Prebuilt German-locale instance

    // Generate a user with German-influenced data
    const germanUser = {
      id: fakerDE.number.int(),
      firstName: fakerDE.person.firstName(),
      lastName: fakerDE.person.lastName(),
      email: fakerDE.internet.email(),
    };
    console.log(germanUser);

This customization allows generating data that matches the cultural context of the application’s target audience.
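A generator that always calls the default faker instance cannot switch locales on its own. One possible approach is to pass the instance in as a parameter. This is a sketch assuming @faker-js/faker v8 or later; generateLocalizedUser is a hypothetical name, not part of the library.

```typescript
import { Faker, faker, fakerDE } from '@faker-js/faker';

interface User {
  id: number;
  firstName: string;
  lastName: string;
  email: string;
}

// Hypothetical helper: the caller chooses the locale by choosing the Faker instance.
const generateLocalizedUser = (f: Faker = faker): User => ({
  id: f.number.int(),
  firstName: f.person.firstName(),
  lastName: f.person.lastName(),
  email: f.internet.email(),
});

console.log(generateLocalizedUser());        // default instance
console.log(generateLocalizedUser(fakerDE)); // German-locale instance
```

Defaulting the parameter to faker keeps existing call sites unchanged while letting tests or seed scripts opt into another locale.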

Conclusion

Integrating Faker.js with TypeScript combines the data generation capabilities of Faker.js with TypeScript’s static type-checking. The result is mock and test data that is realistic and varied while still matching the shape of your data models, which makes development, testing, and quality assurance more efficient and reliable.