Rate limiting is critical both for securing APIs and for ensuring their fair use. In an Express application, it can prevent abuse and help services remain responsive. Below, we explore three solutions for implementing rate limiting in Node.js with Express.

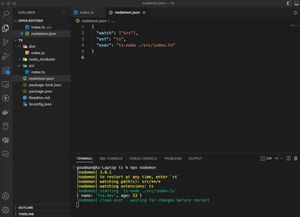

Solution 1: Using express-rate-limit

Summary: express-rate-limit is middleware for limiting repeated requests to public APIs and to sensitive endpoints such as password reset.

- Install the express-rate-limit package using npm or yarn.

- Import express-rate-limit and apply it to your Express application or specific routes.

- Configure the middleware by setting properties such as windowMs and max to control the rate limit.

- Optionally, customize the message or behavior when the rate limit is reached.

const express = require('express');
const rateLimit = require('express-rate-limit');

const app = express();

// Configure the rate limiter
const limiter = rateLimit({
  windowMs: 15 * 60 * 1000, // 15 minutes in milliseconds
  max: 100, // Limit each IP to 100 requests per window (15 minutes)
  message: 'Too many requests from this IP, please try again after 15 minutes'
});

// Apply the rate limiter middleware to all requests
app.use(limiter);

// Route to handle GET requests to the root URL
app.get('/', (req, res) => {
  res.send('Hello World!');
});

// Start the server on port 3000
const PORT = 3000;
app.listen(PORT, () => {
  console.log(`Server running on port ${PORT}`);
});

This code sets up a basic Express server with rate-limiting middleware that restricts the number of requests an individual IP address can make in a 15-minute window, enhancing the server’s resilience against abuse or excessive traffic.

Pros: Easy to use; Configurable; Minimal overhead.

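Cons: Basic; Not distributed (by default, counters live in the memory of a single process, so they are not shared between instances and are lost on restart); May not suffice for larger applications with high traffic or complex requirements.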
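The "not distributed" limitation can be illustrated without any library. The sketch below assumes a hypothetical `createCounter` helper that stands in for a per-process, in-memory counter; it is not how express-rate-limit is implemented internally. Two counters play the role of two server instances behind a load balancer.

```javascript
// Hypothetical stand-in for a per-process, in-memory request counter.
// (Illustrative only; not the internals of express-rate-limit.)
function createCounter(max) {
  const hits = new Map(); // ip -> number of requests seen by this process
  return {
    // Returns true if the request is allowed, false if it exceeds the limit.
    allow(ip) {
      const count = (hits.get(ip) || 0) + 1;
      hits.set(ip, count);
      return count <= max;
    },
  };
}

// Two independent counters simulate two server instances, each limited to 3.
const instanceA = createCounter(3);
const instanceB = createCounter(3);

// A load balancer alternates one client's requests between the instances.
let allowed = 0;
for (let i = 0; i < 10; i++) {
  const instance = i % 2 === 0 ? instanceA : instanceB;
  if (instance.allow('203.0.113.7')) allowed++;
}

console.log(`Allowed ${allowed} of 10 requests`); // Allowed 6 of 10 requests
```

Because neither instance sees the other's count, the client gets six requests through even though the intended limit is three. A shared store removes this gap.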

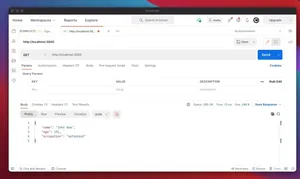

Solution 2: Using Redis Store with express-rate-limit

Summary: Combining express-rate-limit with a Redis store improves scalability by keeping request counts in an external data store that every application instance can share.

- Set up a Redis server and install the redis and rate-limit-redis packages.

- Configure express-rate-limit to use the Redis store for maintaining per-IP request counts.

- Apply the middleware as in the basic express-rate-limit usage.

const express = require('express');
const rateLimit = require('express-rate-limit');
const RedisStore = require('rate-limit-redis');
const redis = require('redis');

const app = express();

// Create a Redis client
const redisClient = redis.createClient({
  // Include Redis configuration here if needed (e.g., host, port)
});

// Handle Redis client errors
redisClient.on('error', (err) => console.log('Redis Client Error', err));

// Configure the rate limiter to use Redis store
// Note: the `client` option below is the older rate-limit-redis API. Recent
// releases expect a `sendCommand` function instead, and node-redis v4+ also
// requires calling redisClient.connect(). Check the README for your versions.
const limiter = rateLimit({
  store: new RedisStore({
    client: redisClient
  }),
  windowMs: 15 * 60 * 1000, // 15 minutes in milliseconds
  max: 100, // Limit each IP to 100 requests per window
  message: 'Too many requests, please try again later.'
});

// Apply the rate limiter middleware to all requests
app.use(limiter);

// Route to handle GET requests to the root URL
app.get('/', (req, res) => {
  res.send('Hello World!');
});

// Start the server on port 3000
const PORT = 3000;
app.listen(PORT, () => {
  console.log(`Server running on port ${PORT}`);
});

Pros: Scalable; Suitable for distributed systems; Persists rate limit counters.

Cons: Requires Redis setup; Adds complexity; Additional dependency.
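To make the shared-counter idea concrete, here is a minimal sketch built on two Redis commands commonly used for this pattern, INCR and PEXPIRE. The `FakeRedis` class is a hypothetical in-memory stand-in so the example runs without a server, and `hit` is an illustrative helper, not the rate-limit-redis implementation. Real Redis clients are asynchronous; the calls are synchronous here only for brevity.

```javascript
// Hypothetical in-memory stand-in for a Redis client, supporting only the
// two commands used here. A real client would talk to a shared Redis server.
class FakeRedis {
  constructor(now) {
    this.now = now;        // injected clock, returns milliseconds
    this.data = new Map(); // key -> { value, expiresAt }
  }
  // INCR: create the key at 1 or increment it; expired keys start over.
  incr(key) {
    const entry = this.data.get(key);
    if (!entry || (entry.expiresAt !== null && entry.expiresAt <= this.now())) {
      this.data.set(key, { value: 1, expiresAt: null });
      return 1;
    }
    entry.value += 1;
    return entry.value;
  }
  // PEXPIRE: set a time-to-live in milliseconds on an existing key.
  pexpire(key, ms) {
    const entry = this.data.get(key);
    if (entry) entry.expiresAt = this.now() + ms;
  }
}

// Illustrative helper: count one request and report whether it is allowed.
function hit(client, ip, { windowMs, max }) {
  const key = `rl:${ip}`;
  const count = client.incr(key);
  if (count === 1) client.pexpire(key, windowMs); // first hit starts the window
  return count <= max;
}

// Every "instance" would use the same client, so the limit holds across them.
let clock = 0;
const shared = new FakeRedis(() => clock);
const options = { windowMs: 1000, max: 3 };
const results = [];
for (let i = 0; i < 5; i++) results.push(hit(shared, '203.0.113.7', options));
clock = 1000; // the window has elapsed, so the counter starts over
results.push(hit(shared, '203.0.113.7', options));

console.log(results); // [ true, true, true, false, false, true ]
```

In this sketch INCR and PEXPIRE are separate steps. Against a real server they are usually made atomic, for example with a Lua script or a transaction, so a key cannot be left without an expiry.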

Solution 3: Custom Rate Limiter

Building a custom rate limiting middleware provides full control over the rate limiting logic and storage mechanism.

- Decide on a data structure or store to hold request counts and timestamps.

- Create middleware that updates the stored counts and checks them against the limit before passing control to the next handler.

- Add error handling to respond with a rate limit exceeded message.

const express = require('express');

const app = express();

// Rate limiting settings
const rateLimits = {};
const MAX_REQUESTS = 100;
const WINDOW_MS = 15 * 60 * 1000; // 15 minutes

// Rate limiter middleware
const rateLimiter = (req, res, next) => {
  const ip = req.ip;
  const now = Date.now();

  if (!rateLimits[ip]) {
    rateLimits[ip] = { requests: 1, startTime: now };
    return next();
  }

  const deltaTime = now - rateLimits[ip].startTime;

  if (deltaTime < WINDOW_MS) {
    rateLimits[ip].requests++;
    if (rateLimits[ip].requests > MAX_REQUESTS) {
      return res.status(429).json({ message: 'Too many requests. Please try again later.' });
    }
    return next();
  }

  // Reset rate limit for IP after window passes
  rateLimits[ip] = { requests: 1, startTime: now };
  next();
};

// Apply the rate limiter to all requests
app.use(rateLimiter);

// Route to handle GET requests to the root URL
app.get('/', (req, res) => {
  res.send('Hello World!');
});

// Start the server
const PORT = 3000;
app.listen(PORT, () => {
  console.log(`Server running on port ${PORT}`);
});

Pros: Maximum flexibility; No third-party dependencies; Can be tailored to specific requirements.

Cons: More complex to implement; Requires careful testing; May lack optimizations of established solutions (this example, for instance, keeps counters in process memory and never removes old entries).
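One way to ease the testing and cleanup concerns of a custom limiter is to separate the counting logic from Express and inject the clock. The sketch below is one possible arrangement, not a definitive implementation; `createLimiter`, `check`, `prune`, and `toMiddleware` are hypothetical names introduced for illustration. It uses the same fixed-window approach as the code above, stores entries in a `Map`, and reports how long a blocked client should wait.

```javascript
// Sketch of a fixed-window limiter with an injectable clock and cleanup.
// All names here (createLimiter, check, prune, toMiddleware) are hypothetical.
function createLimiter({ max, windowMs, now = () => Date.now() }) {
  const windows = new Map(); // key -> { count, startTime }

  // Record one request for `key` and decide whether it is allowed.
  function check(key) {
    const t = now();
    let entry = windows.get(key);
    if (!entry || t - entry.startTime >= windowMs) {
      entry = { count: 0, startTime: t }; // start a fresh window
      windows.set(key, entry);
    }
    entry.count += 1;
    const retryAfterMs = entry.startTime + windowMs - t;
    return { allowed: entry.count <= max, retryAfterMs };
  }

  // Drop entries whose window has ended so the Map does not grow forever.
  function prune() {
    const t = now();
    for (const [key, entry] of windows) {
      if (t - entry.startTime >= windowMs) windows.delete(key);
    }
    return windows.size; // number of entries still tracked
  }

  return { check, prune };
}

// Wrap the limiter as Express-style middleware (req, res, next).
function toMiddleware(limiter) {
  return (req, res, next) => {
    const { allowed, retryAfterMs } = limiter.check(req.ip);
    if (allowed) return next();
    // Retry-After is expressed in whole seconds.
    res.set('Retry-After', String(Math.ceil(retryAfterMs / 1000)));
    return res.status(429).json({ message: 'Too many requests. Please try again later.' });
  };
}

// Drive the limiter with a fake clock: 2 requests allowed per 1000 ms.
let clock = 0;
const limiter = createLimiter({ max: 2, windowMs: 1000, now: () => clock });
const first = limiter.check('a');  // allowed
const second = limiter.check('a'); // allowed
clock = 400;
const third = limiter.check('a');  // rejected, 600 ms left in the window
clock = 1000;
const remaining = limiter.prune(); // window ended, so the entry is removed

console.log(first.allowed, second.allowed, third, remaining);
```

In an application, the middleware could be registered with `app.use(toMiddleware(limiter))`, and `prune` could be called periodically, for example from `setInterval`. Because the clock is injected, window expiry can be tested without waiting for real time to pass.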

Conclusion

Rate limiting is essential for protecting your Express applications from abuse and overload. There are various methods available, ranging from simple middleware like express-rate-limit to more complex solutions like implementing a custom rate limiter or using Redis for a distributed system. Each approach comes with its trade-offs concerning simplicity, flexibility, scalability, and overhead. Assessing the specific needs of your application, such as traffic patterns and the environment it runs in, will help you choose the most appropriate rate-limiting strategy.